Overpowered: The Fine Mess We’ve Gotten Ourselves Into

… [T]he energetic metabolism of our species has grown in size to be comparable in magnitude to the natural metabolic cycles of the terrestrial biosphere. This feature underlies almost all environmental challenges we face in the 21st century, ranging through resource depletion, overharvesting of other species, excessive waste products entering into land, oceans and atmosphere, climate change, and habitat and biodiversity loss.

—Yadvinder Malhi, Is the Planet Full?

Earth will be monetized until all trees grow in straight lines, three people own all seven continents, and every large organism is bred to be slaughtered.

―Richard Powers, The Overstory

We often think of the downside of power just in terms of its abuses—people using power with evil intent. There are many ways of abusing power, and the fields of clinical psychology, political science, and law are largely concerned with understanding and remedying abuses of social power. However, in this chapter I will focus primarily on power dilemmas that are less frequently discussed, but that may be even more important for us to understand, especially in the 21st century: problems that occur when sheer amounts of physical power overwhelm natural systems, and when concentrated vertical social power threatens individual and collective human well-being.

The problems of the abuse of power and of over-empowerment are not mutually exclusive. Indeed, especially in complex societies, over-empowerment often encourages the abuse of power. But the dilemma of too much power is not confined to the social arena, and may be easier to understand, at least in principle, by way of a few examples that have little to do with human relations.

Electrical engineers are well acquainted with the problem of too much power. An electrical power overload—in which electricity flows through wires that are too small to handle the current—can cause a fire. Similarly, physicists know that, while fuels and batteries store energy for useful purposes, when that energy is released too quickly an explosion can result.

Earth is constantly bathed in 174 petawatts of power from the sun (a petawatt is one quadrillion watts). That solar power drives weather and life on our planet; everything beautiful and pleasant in our lives traces back to it. But sometimes this power moves through Earth’s systems in a destructive surge. Storms cause the quick release of enormous amounts of energy. The wildfires in Sonoma County, California, where I live, can burn with many gigawatts of power. A gigawatt of electrical power that’s controlled via power lines, transformers, and circuits can supply light, heat, and internet connections to a small-to-medium-sized city. A gigawatt of radiative power unleashed in a firestorm can torch that same community in just a few hours.

The same essential principle holds with social power. Cooperation, the basis of social power, gives us the means to accomplish wonders. But social power, channeled through elaborate economic systems featuring various forms of debt and investment, can also enable the pooling of immense amounts of wealth in just a few hands, resulting in needless widespread poverty and eventual civil unrest. That same social power, released in a sudden burst, can lead to the deaths of millions of people through war or genocide.

In this chapter I will summon evidence to make the case that we humans have, in many instances, amassed too much physical power and vertical social power for our own good, and that we are overpowering natural systems and social systems with that power, creating conditions potentially leading to unnecessary human mortality and suffering, as well as ecological disaster, on a massive scale.

Power is essential; without it, we would be literally powerless. But one can have too much of a good thing. How much power is enough? How much is too much?

The best way to judge how much power is too much is by assessing the consequences of its usage, taking care to untangle (as much as possible) issues caused by abuse of power from those resulting simply from the concentration or proliferation of too much of it. Here’s a telling example: our ability to control fire has done us immense good over the millennia. But today nearly everything we do is associated with a little fire somewhere, perhaps in a factory or a power plant or the engine of our car. Engineers have taken great care, over the past few decades, to increase the efficiency of engines and furnaces, especially in the industrialized countries that do most of the burning. But each of those fires still inevitably emits carbon dioxide, and all these billions of fires added together are giving off enough CO2 to imperil life on the planet. Fire is good; but too much fire, even intelligently controlled fire, can be very, very bad. (I’ll discuss climate change in more depth below.)

We are about to survey evidence that humanity as a whole is over-empowered, evidence that consists of harmful consequences already unfolding. To make a logically consistent case, I will show that these consequences are not merely random bad things happening. Difficulties, even tragedies, are inevitable parts of life; and war, famine, and inequality have long histories. I will show what’s different today, focusing on impacts that are of a scale far larger than those occurring previously, that could result in severe injury for massive numbers of humans and in some cases the entire biosphere, and that are clearly traceable to humanity’s recently enhanced powers. In addition, in each section below, we will see why solutions to our deepening problems cannot be achieved just by exposing and ending abuses of power, but are instead necessarily contingent on a reduction, or reining in, of specific human powers.

Climate Chaos and Its Remedies

Climate change is the biggest environmental crisis humans have ever faced, and it is probably the most crucial nexus of current issues concerning physical and social power. Let’s briefly explore the problem in general terms; then we’ll see why it is a result of too much power and why it can be solved only by limiting and reducing our power.

Climate change is primarily (but not solely) caused by human-produced greenhouse gases. The main greenhouse gases and their main sources are:

- carbon dioxide, released from fossil fuel combustion;

- methane, released by farm animals and by the natural gas industry (natural gas is essentially methane); and

- nitrous oxide, released by agriculture and fossil fuel combustion.

We contribute to climate change when we burn coal or natural gas for electricity, or oil for transportation, thereby releasing carbon dioxide and nitrous oxide. The cattle we raise for food emit methane. Industrial agriculture and timber harvesting release carbon stored in soils and vegetation into the atmosphere as carbon dioxide.

At the dawn of the industrial age, the carbon dioxide content of Earth’s atmosphere was 280 parts per million. Today it’s over 420 ppm and rising fast. Greenhouse gases trap heat in the atmosphere, causing the overall temperature of Earth’s surface to increase. It has risen by over one degree Celsius so far; it is projected to rise as much as five degrees more by the end of this century.

Now, a few degrees may not sound like much, and in the middle of winter it might even sound comfortable. But the planet’s climate is a highly complex system. Even slight changes in global temperatures can create ripple effects in terms of weather patterns and the viability of species that have evolved to survive in particular conditions.

Moreover, climate change does not imply a geographically consistent, gradual increase in temperatures. Different places are being affected in different ways. The American southwest will likely be afflicted with longer and more severe droughts. At the same time, a hotter atmosphere holds more water, leading to far more severe storms and floods in other places. Melting glaciers are causing sea levels to rise, leading to storm surges that can inundate coastal cities, placing hundreds of millions of people at risk.

As carbon dioxide emissions are raising the planet’s temperature, they are also being absorbed by the Earth’s oceans, increasing the water’s acidity and imperiling tiny ocean creatures at the bottom of the aquatic food chain. Because the oceans are also being impacted by other kinds of pollution (nitrogen runoff and plastics) and are being overfished, some scientists now warn that entire marine ecosystems are at risk this century. To put it as plainly as possible, the oceans are dying.

Since the 1990s, the United Nations has sought to rally the world’s nations to reduce greenhouse gas emissions. After 21 years of international meetings and failed negotiations, 196 nations agreed in December 2015 on a collective goal of limiting the temperature increase above pre-industrial levels to 2 degrees Celsius (3.6 degrees Fahrenheit) with the ideal being 1.5 degrees or less. But actual emissions have so far not been capped.

Some of the difficulty in reaching consensus on climate action comes from the fact that most historic emissions came from the world’s financially and militarily powerful nations, which continue burning fossil fuels at high rates; while some poorer and less powerful nations, which have less historic responsibility, are rapidly increasing their fossil fuel consumption in order to industrialize. Some of the poorest nations are also the ones most vulnerable to climate change. All of this makes it hard to agree who should reduce emissions and how fast.

Sidebar 20: Personal Carbon Output

There are many areas of uncertainty in climate science, even though the basic mechanism of global warming is clear. So far, in many cases actual ecosystem impacts have come faster than scientists have forecast, but we don’t know whether future impacts will adhere more closely to experts’ models or continue to outstrip them. Also, there are uncertainties about how best to account for, and assign responsibility for, greenhouse gas emissions: for example, if China burns coal to produce goods for American shoppers, should the resulting emissions be attributed to China or to the United States? How should we count emissions from shipping and aviation (which are usually omitted from national greenhouse gas accounts)?

There is controversy as well about how we might capture carbon from the atmosphere and sequester it for a long time. As the threats of the climate crisis become increasingly clear and our remaining “carbon budget” (the amount we can emit before crossing dangerous thresholds) shrinks, ever more attention is being paid to what are called “negative emissions technologies.” But most of these technologies—with the exception of natural ways to sequester carbon in soils and trees—are theoretical, unproven, or unscalable.

Altogether, climate change poses enormous challenges, including these:

- How can we rapidly reduce the use of fossil fuels without experiencing unacceptable consequences of economic contraction?

- How can we reduce the use of fossil fuels fairly?

- How can we protect nations and communities that are most vulnerable to climate change?

- Can we take carbon out of the atmosphere and store it so as to reduce the severity of climate change?

The difficulty of the project of reducing emissions is illustrated by the example of California. That state’s climate pollution declined by just 1.15 percent in 2017, according to the California Green Innovation Index.2 (For the US as a whole, greenhouse gas emissions fell less than one percent in 2017, but rose 3.4 percent in 2018.) At the 2017 rate, California won’t reach its 2030 decarbonization goals (cutting emissions to 40 percent below 1990 levels) until 2061, or its 2050 targets (80 percent below 1990 levels) until 2157. The state has pioneered cap-and-trade legislation and ambitious fuel economy standards, among many other climate-friendly policies and initiatives. If California will be up to a century late in meeting its climate goals, what does this say for the rest of the nation, and the world as a whole? Further, it must be noted that California understandably undertook the easiest and lowest-cost actions first; as time goes on, further increments of carbon reduction will tend to be more expensive and politically fraught. Clearly, meeting global climate targets (“net zero” emissions by 2050 to limit warming to 1.5 degrees C, or by 2070 to stay below 2 degrees C) will require vastly more effort than is currently under way.
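The arithmetic behind dates like these can be roughly reconstructed. The following is a minimal sketch in Python, not the Index’s own method. It assumes a constant 1.15 percent decline compounding each year from 2017, and it assumes that California’s 2017 emissions were roughly equal to 1990 levels (a figure that does not come from this chapter). The function name and constants are illustrative.

```python
import math

BASE_YEAR = 2017
ANNUAL_DECLINE = 0.0115   # 1.15 percent per year, the 2017 rate quoted in the text
CURRENT_RATIO = 1.0       # ASSUMPTION (not from the text): 2017 emissions ~ 1990 levels


def years_to_target(current_ratio, target_ratio, annual_decline):
    """Years of compounding decline needed to fall from current_ratio to
    target_ratio, both expressed as fractions of 1990 emissions."""
    return math.log(target_ratio / current_ratio) / math.log(1.0 - annual_decline)


# 40 percent below 1990 levels -> 0.60; 80 percent below -> 0.20
for label, target in (("2030 goal", 0.60), ("2050 goal", 0.20)):
    years = years_to_target(CURRENT_RATIO, target, ANNUAL_DECLINE)
    print(f"{label}: about {years:.0f} years, reached around {BASE_YEAR + math.ceil(years)}")
```

Under these assumptions the results land within a year or two of the dates quoted above. A slightly different starting ratio or rounding convention would account for the remaining difference.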

Climate change is often presented as a technical pollution issue. In this framing, humanity has simply made a mistake in its choice of energy sources; a solution to climate change entails switching energy sources and building enough carbon-sucking machines to clear the atmosphere of excess CO2. But techno-fixes (that is, technological solutions that circumvent the need for personal, political, or cultural change) aren’t working so far, and likely won’t work in the future. That’s because fossil fuels will be difficult to replace, and energy usage is central to our collective economic power.

Consider this brief thought experiment. Could the Great Acceleration in population and consumption have happened with wind or water power? As we’ve seen, these energy sources were already available at the start of the Acceleration, but they were by themselves inadequate to spark a massive industrialization of production and transport, or to enable a tripling of agricultural output; instead, it was the characteristics of fossil fuels that propelled the dramatic expansion of humanity’s powers. Since we adopted fossil fuels, we have introduced new sources of energy (solar PV panels, giant wind turbines, and nuclear power plants), but we would never have been able to develop them if we hadn’t had access to the power of fossil fuels to enable improvements in metallurgy and a host of other industrial fields that led to our ability to construct solar cells, wind towers, and nuclear reactors.

Moreover, the replacement of fossil fuels with other energy sources faces hurdles. As noted in the last chapter, many energy analysts regard solar and wind as the best candidates to substitute for fossil fuels in power generation (since, as we’ve seen, nuclear is too expensive and too risky, and would require too much time for build-out; and hydro is capacity constrained). But these “renewables” are not without challenges. While sunlight and wind are themselves renewable, the technologies we use to capture them aren’t: they’re constructed of nonrenewable materials like steel, silicon, concrete, and rare earth metals that require energy for mining, transport, and transformation.

Sunlight and wind are intermittent: we cannot control when the sun will shine or the wind will blow. Therefore, to ensure constant availability of power, these sources require some combination of four strategies:

- Energy storage (by way of batteries; or by pumping water uphill when there is sufficient power, so that it can be released later to generate power as it flows back downhill) is useful to balance out day-to-day intermittency, but nearly useless when it comes to seasonal intermittency; also, storing energy costs energy and money.

- Source redundancy (building far more generation capacity than will actually be needed on “good” days), and then connecting far-flung solar and wind farms by way of massive super-grids, is a better solution for seasonal intermittency, but requires substantial infrastructure investment.

- Excess electricity generated at times of peak production can be used to make synthetic fuels (such as hydrogen, ammonia, or methanol), perhaps using carbon captured from the atmosphere, as a way of storing energy; however, making large amounts of such fuels will again require substantial infrastructure investment, and the process is inherently inefficient.

- Demand management (using electricity when it’s available, and curtailing usage when it isn’t) is the cheapest way of dealing with intermittency, but it often requires behavioral change or economic sacrifice.

Today the world uses only 20 percent of its final energy in the form of electricity. The other 80 percent of energy is used in the forms of solid, liquid, and gaseous fuels. A transition away from fossil fuels will entail electrification of much of that other 80 percent of energy usage, which includes transportation and industrial processes. Many uses of energy, such as aviation and high-heat industrial processes, will be difficult or costly to electrify. In principle, the electrification conundrum could be overcome using synfuels. However, doing this at scale would require a massive infrastructure of pipelines, storage tanks, carbon capture devices, and chemical synthesis plants that would essentially replicate much of our current natural gas and oil supply system.

Machine-based carbon removal and sequestration methods work well in the laboratory, but would need staggering levels of investment in order to be deployed at a meaningful scale, and it’s unclear who would pay for them. The best carbon capture-and-sequestration responses appear instead to consist of various methods of ecosystem restoration and soil regeneration. These strategies would also reduce methane and nitrous oxide emissions. But they would require a near-complete rethinking of food systems and land management.

Recently I collaborated with a colleague, David Fridley of the Energy Analysis Program at Lawrence Berkeley National Laboratory, to look closely at what a full transition to a solar-wind economy would mean (our efforts resulted in the book Our Renewable Future).3 We concluded that it will constitute an enormous job, requiring many trillions of dollars in investment. In fact, the task may be next to impossible—if we attempt to keep the overall level of societal energy use the same, or expand it to fuel further economic growth.4 David and I concluded:

We citizens of industrialized nations will have to change our consumption patterns. We will have to use less overall and adapt our use of energy to times and processes that take advantage of intermittent abundance. Mobility will suffer, so we will have to localize aspects of production and consumption. And we may ultimately forgo some things altogether. If some new processes (e.g., solar or hydrogen-sourced chemical plants) are too expensive, they simply won’t happen. Our growth-based, globalized, consumption-oriented economy will require significant overhaul.5

As we’ve seen throughout this book, energy transitions are never merely technical; they are transformative for society. The end of fossil fuels—even in the best case, with forethought and proactive investment—will result in cascading disruptions to every aspect of daily existence.

The essence of the problem is this: nearly everything we need to do to solve the climate problem (including building new low-emissions electrical generation capacity, and electrifying energy usage) requires energy and money. But society is already using all the energy and money it can muster in order to do the things that society wants and needs to do (extract resources, manufacture products, transport people and materials, provide health care and education, and so on). If we take energy and money away from those activities in order to fund a rapid energy transition on an unprecedented scale, then the economy will contract, people will be thrown out of work, and many people will be miserable. On the other hand, if we keep doing all those things at the current scale while also rapidly building a massive alternative infrastructure of solar panels, wind turbines, battery banks, super grids, electric cars and trucks, and synthetic fuel factories, the result will be a big pulse of energy usage that will significantly increase carbon emissions over the short term (10 to 20 years), since the great majority of the energy currently available for the project must be derived from fossil fuels.

It takes energy to make solar panels, wind turbines, electric cars, and new generations of industrial equipment of all kinds. For a car with an internal combustion engine, ten percent of lifetime energy usage occurs in the manufacturing stage. For an electric car, roughly 40 percent of energy usage occurs in manufacturing, and emissions during this stage are 15 percent greater than for an ICE car (over the entire lifetime of the e-car, emissions are about half those of the gasoline guzzler). With solar panels, energy inputs and carbon emissions are similarly front-weighted to the manufacturing phase; energy output and emissions reduction (from offsetting other electricity generation) come later. Replacing a very high percentage of our industrial infrastructure and equipment quickly would therefore entail a historically large burst of energy usage and carbon emissions. By undertaking a rapid energy transition, while also maintaining or growing current levels of energy usage for “normal” purposes, we would be defeating our goal of reducing emissions now (even though we would be working toward the goal of reducing emissions later).
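A rough calculation shows what front-weighting means when a working gasoline car is replaced early. The sketch below is illustrative only. It takes the percentages from the paragraph above, but it assumes that the ten percent manufacturing share of energy for the gasoline car also applies to its emissions, that both cars last a hypothetical 15 years, and that driving emissions are spread evenly over those years. All names and units are invented for the example.

```python
# Illustrative arithmetic only. Units are arbitrary:
# the gasoline (ICE) car's lifetime emissions are set to 100.
ICE_LIFETIME = 100.0
ICE_MANUFACTURING = 0.10 * ICE_LIFETIME      # ASSUMPTION: energy share also holds for emissions
EV_MANUFACTURING = 1.15 * ICE_MANUFACTURING  # "15 percent greater" (from the text)
EV_LIFETIME = 0.50 * ICE_LIFETIME            # "about half" (from the text)
LIFETIME_YEARS = 15.0                        # ASSUMPTION: hypothetical service life


def yearly_driving_emissions(lifetime_total, manufacturing, years=LIFETIME_YEARS):
    """Emissions per year of use, spreading non-manufacturing emissions evenly."""
    return (lifetime_total - manufacturing) / years


def payback_years():
    """Years of driving before an EV that replaces a still-working ICE car
    has offset the emissions of its own manufacture."""
    ice_per_year = yearly_driving_emissions(ICE_LIFETIME, ICE_MANUFACTURING)
    ev_per_year = yearly_driving_emissions(EV_LIFETIME, EV_MANUFACTURING)
    return EV_MANUFACTURING / (ice_per_year - ev_per_year)


print(f"Up-front emissions of the new EV: {EV_MANUFACTURING:.1f} units")
print(f"Payback time under these assumptions: {payback_years():.1f} years")
```

With these inputs the new car starts out with an emissions debt that takes a little over three years of driving to repay. Until then, total emissions are higher than if the old car had stayed on the road. The exact figure depends heavily on the assumed lifetime and on how the electricity is generated.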

One way to mostly avoid that nasty conundrum would be to slow the transition, replacing old power plants and emissions-spewing cars, planes, ships, trucks, and factories as they wear out with new versions that don’t produce carbon emissions. But the process would take far too long, since much of our existing infrastructure has a multi-decade lifespan, and we need to get to zero net emissions by roughly 2050 to avert unacceptable climate impacts.

Some folks nurture the happy illusion that we can do it all—continue to grow the economy while also funding the energy transition. But that assumes the problem is only money (if we find a way to pay for it, then the transition can be undertaken with no sacrifice). This illusion can be maintained only by refusing to acknowledge the stubborn fact that all activity, including building alternative energy and carbon capture infrastructure, requires energy.

The only way out of the dilemma arising from the energy and emissions cost of the transition is to reduce substantially the amount of energy we are using for “normal” economic purposes—for resource extraction, manufacturing, transportation, heating, cooling, and industrial processes—both so that we can use that energy for the transition (building solar panels and electric vehicles), and so that we won’t have to build as much new infrastructure (since, if our ongoing operational energy usage is smaller, we won’t need as many solar panels and so on). Increased energy efficiency can help reduce energy usage without giving up energy services, but many machines (LED lights, electric motors) and industrial processes are already highly efficient, and further large efficiency gains in those areas are unlikely. We would achieve an efficiency boost by substituting direct electricity generators (solar and wind) for inherently inefficient heat-to-electricity generators (natural gas and coal power plants); but we would also be introducing new inefficiencies into the system via battery-based electricity storage and synfuels production. In the end, the conclusion is inescapable: actual reductions in energy services would be required in order to transition away from fossil fuels without creating a significant short-term burst of emissions. Some energy and climate analysts other than David Fridley and myself—such as Kevin Anderson, Professor of Energy and Climate Change at the University of Manchester—have reached this same conclusion independently.6

As we’ve seen, energy is inextricably related to power. Thus, if society voluntarily reduces its energy usage by a significant amount in order to minimize climate impacts, large numbers of people will likely experience this as giving up power in some form.

It can’t be emphasized too much: energy is essential to all economic activity. An economy can grow continuously only by employing more energy (unless energy efficiency can be increased substantially, and further gains in efficiency can continue to be realized in each succeeding year). World leaders demand more economic growth in order to fend off unemployment and other social ills. Thus, in effect, everyone is counting on having more energy in the future, not less.

A few well-meaning scientists try to avoid the climate-energy-economy dilemma by creating scenarios in which renewable energy saves the day simply by becoming dramatically cheaper than energy from fossil fuels, or by ignoring the real costs of dealing with energy intermittency in solar and wind power generation. Some pundits argue that we have to fight climate change by becoming even more powerful than we already are—by geoengineering the atmosphere and oceans and thus taking full control of the planet, thereby acting like gods.7 And some business and political leaders simply deny that climate change is a problem; therefore, no action is required. I would argue that all of these people are deluding themselves and others.

Problems ignored usually don’t go away. And not all problems can be solved without sacrifice. If minimizing climate change really does require substantially reducing world energy usage, then policy makers should be discussing how to do this fairly and with as little negative impact as possible. The longer we delay that discussion, the fewer palatable options will be left.

The stakes could hardly be higher. If growth in emissions continues, the result will be the failure of ecosystems, massive impacts on economies, widespread human migration, and unpredictable disruptions of political systems. The return of famine as a familiar feature of human existence is a very real likelihood.8

The recent global economic recession resulting from governments’ response to the coronavirus pandemic at least temporarily reduced energy usage and carbon emissions. It was not enough to avert the climate crisis, and no one was happy with the unequally distributed pain entailed: the health impacts (suffering and lost lives), the economic sacrifice (lost jobs), and the increasing levels of economic inequality (as the low-paid bore the brunt of both disease and layoffs). A serious, long-term response to the climate crisis will require us to reduce energy usage still further in industrialized nations, and to find ways to share the burden more equitably. It’s difficult to overstate what is at stake: if we don’t adequately address climate change, the fate not just of humans, but of many or even most of the planet’s other species, may hang in the balance.

Disappearance of Wild Nature

We humans are accustomed to thinking of the world only in terms of our own wishes and needs. The vast majority of people’s attention is taken up with family, work, politics, the economy, sports, and entertainment—human interests. It has become all too easy to forget that nature even exists, as society has grown more urbanized, and as the processes of growing food, making goods, and producing energy are handled in large industrial operations far from view. There are millions of children in North America who have never seen the stars at night, visited a national park, or picked a wild blackberry, and who have only the vaguest idea where their food, fuel, and electricity come from.

To a society so preoccupied, Earth’s wild flora and fauna provide an entertaining stage setting for nature documentaries and tourism, but appear to have little further significance. Yet we humans are part of a living system extending from viruses and bacteria all the way up to redwood trees and whales, one with myriad checks, balances, and feedbacks. We evolved within this living system, and cannot persist without it.

As our human populations and consumption habits grow, we displace other species. We turn wild lands supporting a great variety of animals, plants, and fungi into plowed fields dominated by a single crop. Our greenhouse gas emissions change the climate, reducing habitat still further. And we introduce toxic chemicals into the environment, which work their way up through the food chain all the way to mothers’ breast milk.

Sidebar 21: Rising Risk of Disease and Pandemic

In effect, we humans are exercising interspecies exclusionary power, both purposefully (in the cases of “weed” species, including microbes like the polio virus, which we try to wipe out), and inadvertently (in the case of millions of species that simply get in our way), on a scale likely not seen for the last several hundred million years.

The results are apparent everywhere in declining numbers of species of insects, fish, amphibians, birds, and mammals. It has been estimated that humans, along with our cattle, pigs, and other domesticates, now make up 97 percent of all terrestrial vertebrate biomass. The other three percent comprises all the songbirds, deer, foxes, elephants, and so on: all the world’s remaining wild land animals. Meanwhile deforestation and other land uses are wreaking devastation on the world’s plant biodiversity, with one in five plant species currently threatened with extinction.

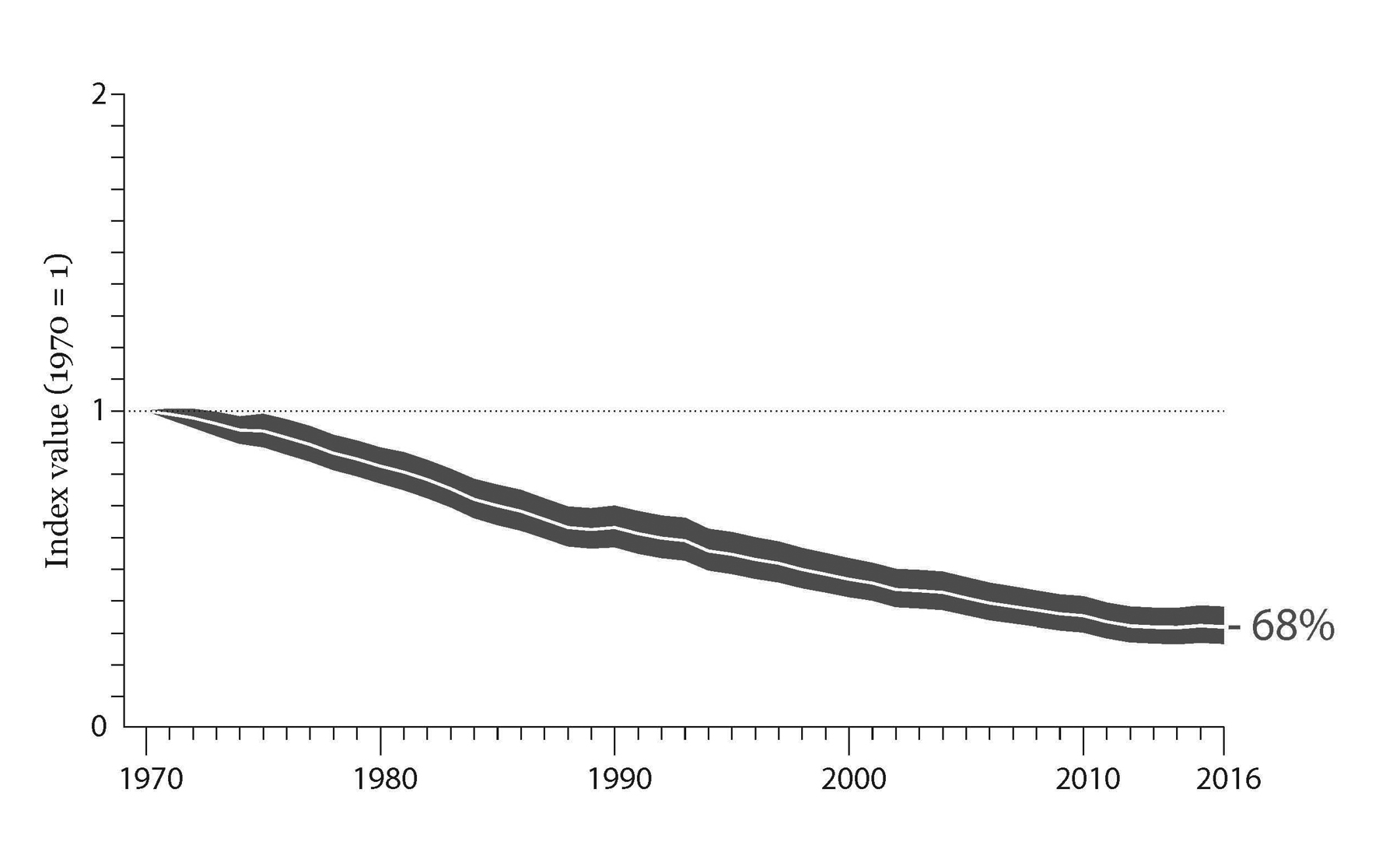

According to the World Wildlife Fund, two-thirds of all wildlife has disappeared in the last 50 years. A third of this loss has resulted from over-exploitation, another third from habitat degradation, and the final third through changes in the environment—which include increasing land use for agriculture and urbanization.10

Figure 5.1 Global wildlife decline (Global Living Planet Index with confidence interval).

Credit: World Wide Fund for Nature, Living Planet Report 2020.

Biological richness is being lost even at the microscopic level. Our use of agricultural chemicals has led to the disappearance from farm soils of bacteria, fungi, nematodes, and other tiny organisms that provide natural fertility. As these microscopic soil communities are destroyed, carbon is released into the atmosphere. Even in the human gut, microscopic biodiversity is on the decline, leaving us more prone to immune disorders, multiple sclerosis, obesity, and other diseases.

Biologists call this widespread, rapid loss of biodiversity the Sixth Extinction. The geological record tells of five previous events when enormous numbers of species perished; the most severe occurred at the end of the Permian period, roughly 250 million years ago, when an estimated 96 percent of marine species disappeared. Evidently, we are now in the early stages of another massive die-off of species, though not yet on the same scale as those five previous cataclysmic events.

What does loss of biodiversity mean for people? At the very least, it implies that today’s children are set to inherit a world in which many of the animals that filled the lives, dreams, and imaginations of our ancestors, and that provided the metaphors at the root of every human language, will be remembered only in picture books. But biodiversity loss also has enormous practical implications for public health and agriculture.

Among other things, natural systems replenish oxygen in the planetary atmosphere, capture and sequester carbon in soils and forests, pollinate food crops, filter freshwater, buffer storm surges, and break down and recycle all sorts of wastes. As we lose biodiversity, we also lose these ecosystem services—which, if we had to perform them ourselves, would cost us over $30 trillion annually, according to several estimates.

Yet our very tendency to be preoccupied with the human impacts of the biodiversity crisis may tell us a great deal about why it is happening in the first place. Many Indigenous cultures believed that other species have as much right to exist as we do. But as cities, houses, cars, and communication media have come to dominate our attention, we have become ever more self-absorbed. We barely notice as the oceans are emptied of life, or as the sound of birdsong disappears from our lives.

Throughout the world, successful programs for biodiversity protection have centered on limiting deforestation, restricting fishing, and paying poor landowners to protect habitat. An international coalition of scientists, conservationists, nonprofits, and public officials has called for setting aside half the planet’s land and sea for biodiversity protection.11 Biologist Edward O. Wilson’s book Half Earth makes the case for this proposal; Wilson estimates that it would reduce the human-induced extinction rate by 80 percent. It’s a bold idea that faces enormous political and economic obstacles. But unless we do something on roughly this scale, wildlife faces an immense threat, one with a distinctly human face.

Land is a fundamental source of wealth, and wealth is a primary form of social power. Therefore, giving up control of land (with the goal of preserving habitat) in effect means ceding power. Naturally, many people prefer not to give up land and the power that it brings; they would prefer to use even more land for mining and other resource extraction, for agriculture, even for renewable energy projects. Further, as long as the human population is growing, increased land use can always be justified for humanitarian purposes. So, how to avoid the trade-off?

Some people think technology could help. Using CRISPR-Cas9 gene-editing technology, it may now be possible to bring some animals and plants back from extinction. Indeed, ecologists at the University of California, Santa Barbara, have already published guidelines for choosing which species to revive if we want to do the most good for our planet’s ecosystems.12 By establishing a genetic library of existing species, we could give future generations the opportunity to bring any organism back from beyond the brink. Doing so could help restore ecosystems that once depended on these species. For example, mammoths trampling across the ancient Arctic helped maintain grasslands by knocking down trees and spreading grass seeds in their dung. When the mammoths disappeared, grasslands gave way to today’s mossy tundra and snowy taiga, which are melting and releasing greenhouse gases into the atmosphere. By reviving the mammoth, we could help slow climate change by turning the tundra back into stable grasslands.13

Nevertheless, as exciting as it may be to contemplate “Jurassic Park”-like projects reviving long-gone animals like the mammoth or the passenger pigeon, bringing back a few individual plants or animals will be a meaningless exercise if these species have no habitat. Some species cannot reproduce in captivity, and zoo specimens do not perform ecological functions. Many scientists involved in extinct species revival efforts understand the need for habitat, and aim only to revive species that could help restore ecosystems.14 Still, it’s important that we keep our priorities straight: without habitat, the revived species themselves are only ornaments. Habitat protection is the real key to reversing biodiversity loss; species revival is just a potentially useful afterthought. And habitat protection means reducing the potential for humans to extract wealth from Earth’s limited surface area, even if we find ways to treat land and water in ways friendlier to wildlife. Ultimately, we cannot avoid the trade-off between biodiversity and the increasing power of human beings.

Resource Depletion

Imagine you’re at a big party. The host wheels out a tub of ice cream (one of the giant ones they use at ice cream parlors) and yells, “Help yourselves!” A few people grab scoops, spoons, and bowls from the kitchen and start digging into the frozen top. As they work the tub and it warms up, the ice cream becomes easier to scoop; more people bring spoons and bowls, and ice cream flies out of the tub as fast as people can eat it. When the ice cream is over half gone, it becomes harder to get at; people have to reach in farther to scoop, and they’re bumping into each other. The ice cream isn’t coming out as fast anymore, and some people lose interest and turn their attention to the cake. Finally, a small group of the most intrepid scoopers is literally scraping the bottom of the barrel, resorting to small spoons to get the last bits of ice cream out.

That’s depletion. The faster you scoop, the sooner you arrive at the point where there is no more left. Like our hypothetical tub of ice cream, Earth’s resources are subject to depletion—but usually the process is a little more complicated.

First, material resources come in two kinds: renewable and nonrenewable.15 Renewable resources like forests and fisheries replenish themselves over time. Harvesting trees or fish faster than they can replenish will deplete them, and if you do that for too long it may become impossible for the species to recover. We’re seeing that happen right now with some global fish stocks.
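The difference between harvesting below and above the rate of replenishment can be sketched with a toy model. The code below assumes a simple logistic regrowth rule; the function name, the carrying capacity of 1,000 units, and the 20 percent regrowth rate are all hypothetical choices made for illustration, not figures for any real forest or fishery.

```python
def simulate_stock(harvest_per_year, years=200, stock=1000.0,
                   capacity=1000.0, regrowth_rate=0.2):
    """Return the stock remaining after repeated yearly harvests.

    Assumed model (illustrative only): each year the stock regrows by
    regrowth_rate * stock * (1 - stock / capacity), then a fixed harvest
    is removed. The stock cannot fall below zero.
    """
    for _ in range(years):
        regrowth = regrowth_rate * stock * (1.0 - stock / capacity)
        stock = max(stock + regrowth - harvest_per_year, 0.0)
        if stock == 0.0:
            break  # the resource is gone and cannot recover
    return stock


if __name__ == "__main__":
    # In this toy model the largest possible yearly regrowth is
    # regrowth_rate * capacity / 4 = 50 units.
    for harvest in (40, 60):
        remaining = simulate_stock(harvest)
        print(f"harvest {harvest}/yr -> stock after 200 years: {remaining:.0f}")
```

In this sketch a harvest of 40 units per year settles at a reduced but stable stock, while a harvest of 60 units per year, only slightly above the maximum regrowth, drives the stock to zero.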

Nonrenewable resources—like minerals, metals, and fossil fuels—don’t grow back at all. Minerals and metals can often be recycled, but that requires energy, and usually the resources gradually degrade as they’re cycled repeatedly. When we extract and burn fossil fuels, they are gone forever.

Another complicating issue is resource quality. Our hypothetical ice cream is the same from the top of the tub to the bottom. But most nonrenewable resources vary greatly in terms of quality. For example, there are rich natural iron deposits called magnetite in which iron makes up about three-quarters of the material that’s mined; at the other end of the spectrum is taconite, of which only about one-quarter is iron. If you’re looking to mine iron, guess which ores you’ll start with. High-grade resources—the “low-hanging fruit”—tend to be depleted first.

Defining which resources can be considered “high-grade” is also a little complicated. We’ve just discussed ore grades. But there are also issues of accessibility: how deeply is the resource buried? And location: is it nearby, or under a mile of ocean water, or in a hostile country on the other side of the planet? There’s also the issue of contaminants: for example, coal with high sulfur content is much less desirable than low-sulfur coal.

Let’s see how issues of resource quality play out in the case of one of the world’s most precious resources, crude oil.

Modern oil production started around 1860 in the United States, when deposits of oil in Pennsylvania were found and simple, shallow wells were drilled into them. This petroleum was under great pressure underground, and the wells allowed that oil to escape to the surface. Over time, as the pressure decreased, the remaining oil needed to be pumped out. When no more oil could be pumped, the well was depleted and abandoned.

Exploration geologists soon discovered oil in other places: Oklahoma, Texas, and California; and later in other parts of the world, particularly the Middle East. During the century and a half that we humans have been extracting and burning oil, hundreds of thousands of individual oil wells around the world have been drilled, depleted, and abandoned.

Oil deposits are generally too big to be drained from a single well; many wells drilling into the same underground reservoir are called an oilfield. Oilfields are geological formations where oil has accumulated over millions of years. Some are small, others are huge. Smaller oilfields are fairly numerous. The super-giant ones—like Ghawar in Saudi Arabia, which in its glory days of the 1990s yielded nearly ten percent of all the world’s oil on a daily basis—are extremely rare (there are 131 super-giants in total, out of some 65,000 total oilfields). Like individual oil wells, oilfields also deplete over time.

Today, most of the world’s onshore crude oil deposits—often classified as conventional oil—have already been discovered and are in the process of being depleted. The oil industry is quickly moving toward several kinds of unconventional oil—such as the oil sands in Canada, deepwater oil in places like the Gulf of Mexico, and tight oil (also known as shale oil) produced by fracking primarily in North Dakota and Texas. Compared to conventional oil, unconventional oil resources are either of lower quality, or are more challenging to extract or process, or both; therefore, production costs are significantly higher, and so are the environmental impacts and risks.

As fossil fuels are depleted, their energy profitability (the energy returned on energy invested in producing them, or EROEI) generally declines. That means more and more of society’s overall resources have to be invested in producing energy. It also means oil prices are likely to become more volatile. Oil companies have a harder time making a profit, while consumers often find oil less affordable.
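A little arithmetic shows why a falling EROEI matters. The sketch below assumes the simplified relation that the share of gross energy left over for the rest of society is 1 - 1/EROEI; the function names and the sample EROEI values are hypothetical round numbers chosen for illustration, not measurements of any particular fuel.

```python
def net_energy_fraction(eroei: float) -> float:
    """Share of gross energy output left after paying the energy cost of production.

    Assumes the simplified relation net = 1 - 1/EROEI.
    """
    if eroei <= 0:
        raise ValueError("EROEI must be positive")
    return 1.0 - 1.0 / eroei


def energy_reinvested_fraction(eroei: float) -> float:
    """Share of gross energy output that must go back into producing energy."""
    return 1.0 - net_energy_fraction(eroei)


if __name__ == "__main__":
    # Illustrative values only (hypothetical, not taken from the text).
    for eroei in (50, 20, 10, 5, 2):
        reinvested = energy_reinvested_fraction(eroei)
        net = net_energy_fraction(eroei)
        print(f"EROEI {eroei:>2}: reinvest {reinvested:.0%}, net {net:.0%}")
```

Under this assumption the decline is not linear: going from an EROEI of 50 to 10 raises the reinvested share from 2 percent to 10 percent, while going from 5 to 2 raises it from 20 percent to 50 percent.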

During the past century, our transportation systems were built on the assumption that oil prices would remain continually low and supplies would continually grow; meanwhile, the petroleum industry was structured to anticipate low extraction costs. Now that extraction costs are increasing because we’re relying more on unconventional resources, there is no longer an oil price that works well for both producers and consumers. Either the oil price is too high, eating into motorists’ disposable income, thereby reducing spending on everything else and making the economy trend toward recession; or the oil price is too low, bankrupting oil producers.

The increasing volatility of prices under conditions of depletion suggests that the free market may not be capable of managing nonrenewable resources in a way that meets everyone’s needs over the long run—especially those of future generations, who don’t have a voice in the discussion. In theory, when a resource that’s in high demand becomes scarce, its price rises to discourage consumption and encourage substitutes. But what if poor people need that resource, too? What if the entire economy depends on ever-expanding cheap supplies of that resource? What if a substitute is hard to find, or fails to match the original resource in versatility or price? What happens to producers if costs of extraction are rising rapidly, but a temporary surge in supply or a fall in demand causes prices to plummet far below production costs? In just the last decade, we’ve seen all of these problems begin to play out in oil markets.

The main alternative to market-based resource extraction and distribution is for governments and communities to collaborate on a program of resource management. We’ve done this with renewable resources by establishing quotas on fishing and by protecting old-growth forests. However, conservation efforts have seldom been undertaken with nonrenewable resources like fossil fuels and minerals. Humanity’s collective plan evidently is to extract and use these resources as quickly as possible, and to hope that economically viable substitutes somehow appear in time to avert economic catastrophe.

In agrarian societies, the most crucial depletion issue is usually the disappearance of soil nutrients—nitrogen, phosphorus, and potassium, which together make soil fertile enough to grow crops. In such societies, soil fertility largely determines the size of the population that can be fed. To avert population declines resulting from famine, successful agrarian societies like the ancient Chinese learned to recycle nutrients using animal and human manures (as we saw in Chapter 3). In contrast, modern industrial societies typically get these nutrients from nonrenewable resources: we mine phosphate from large deposits in China, Morocco, and Florida, and ship it around the world. And we produce artificial nitrogen fertilizers from fossil fuels like natural gas. Since both phosphate deposits and fossil fuel deposits will ultimately be depleted, we will have to find ways to break industrial agriculture’s dependence on these nonrenewable resources if civilization is to become sustainable over the long term.

Meanwhile, our ability to supply plant nutrients artificially has enabled us to largely ignore the depletion of topsoil itself, of which we are losing over 25 billion tons per year globally through erosion and salinization.

In short, the depletion of renewable and nonrenewable resources is a very real problem, and one that probably contributed to the collapse of societies in the past.16 Today we consume resources at a far higher rate than any previous civilization. We can do this mainly due to our reliance on a few particularly useful nonrenewable and depleting resources, namely fossil fuels. Energy from fossil fuels enables us to mine, transform, and transport other resources at very high rates; it also yields synthetic fertilizers to make up for our ongoing depletion of natural soil nutrients. This deep dependency on fossil fuels raises the question of what we will do as the depletion of fossil fuels themselves becomes more of an issue.17

The depletion of resources results from our pursuit of power in the present, but depletion limits humanity’s pursuit of power in the future. We all contribute to resource depletion when we buy goods, use energy, and dispose of trash. The more power we have, the more stuff we can consume. The bigger the scale of our collective human metabolism, and the faster it grows, the more we deplete the environment. We are now using nearly every natural resource faster than nature can regenerate it or technology can substitute for it. And, as a result of exponential growth in extraction rates, half of all the fossil fuels and many other industrial resources that have ever been extracted have been consumed in just the past half-century.18
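The claim that half of everything ever extracted was consumed in the last fifty years is what steady exponential growth would produce. As a rough sketch, assume extraction has grown at a constant rate r from a negligible starting point; then the share of the cumulative total taken in the most recent T years is 1 - e^(-rT). Real extraction histories are far less smooth, and the function names below are hypothetical.

```python
import math


def share_extracted_recently(growth_rate: float, years: float) -> float:
    """Share of all-time cumulative extraction that occurred in the last `years`.

    Assumes constant exponential growth at `growth_rate` (per year)
    from a negligible starting level.
    """
    return 1.0 - math.exp(-growth_rate * years)


def growth_rate_for_half(years: float) -> float:
    """Growth rate at which half of all extraction falls within the last `years`."""
    return math.log(2) / years


if __name__ == "__main__":
    rate = growth_rate_for_half(50)
    print(f"growth rate implied by 'half in 50 years': {rate:.2%} per year")
    print(f"share extracted in the last 50 years: "
          f"{share_extracted_recently(rate, 50):.0%}")
```

Under these assumptions, a growth rate of only about 1.4 percent per year is enough for each half-century's extraction to equal everything extracted before it.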

If we continue on our current course, we are headed toward a crash from which there can be no industrial recovery, because the raw materials needed for operating an industrial economy will be mostly gone—either burned or dispersed across the planet in forms and amounts that would make collection and reprocessing uneconomic in any realistic future scenario.19

Again, we face a trade-off between power and sustainability—in this case, the ability to sustain our access to supplies of resources. It would appear that the only sane basis for an economy would be to use mainly renewable material resources, always at rates equal to or below their rates of natural replenishment, and to use nonrenewable resources only as they can be fully recycled.

We are very far from that condition now. And we are generally moving away from it rather than toward it (i.e., rates of nonrenewable resource use are still growing). Efforts to create a “circular economy” (one in which all materials are reused or recycled) represent a step in the right direction, but are destined to be woefully inadequate if we refuse to dial back total levels of consumption.

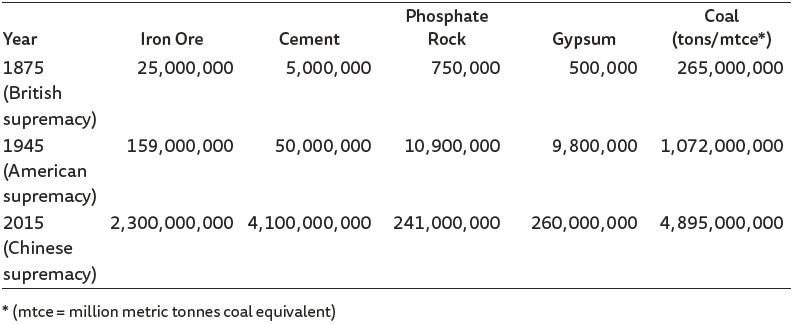

Figure 5.2 Global extraction/production of resources in 1875, 1945, and 2015 (metric tons).

Credit: Christopher Clugston, Blip: Humanity’s 300 Year Self-Terminating Experiment with Industrialism (St. Petersburg, FL: Book Locker, 2019), p. 232.

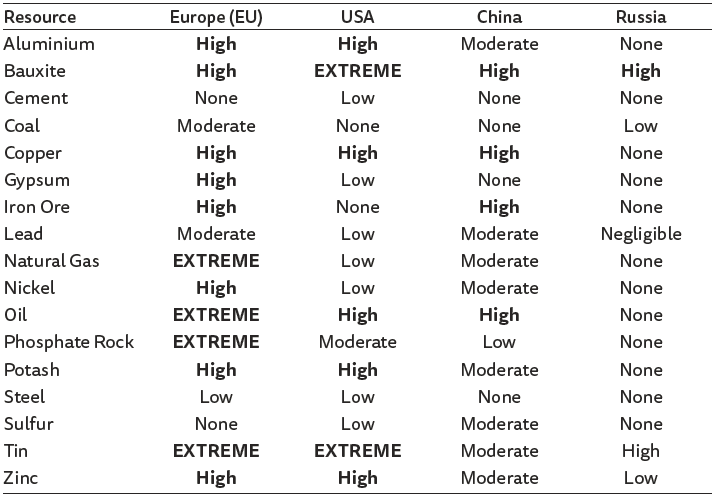

Figure 5.3 Power Center import vulnerability ratings of nonrenewable resources.

Credit: Christopher Clugston, Blip: Humanity’s 300 Year Self-Terminating Experiment with Industrialism (St. Petersburg, FL: Book Locker, 2019), pp. 274-275.

Soaring Economic Inequality

Economic inequality is typically framed as a problem of distribution of power; however, a little thought reveals that this way of looking at inequality, while valid up to a point, is also misleading.

As more wealth flows through a society, the members of that society will tend to become more unequal, until or unless efforts are undertaken to limit inequality by redistributing wealth, or until a political or social crisis forces redistribution. Once wealth inequalities exist, those with the most wealth will have an advantage which they can use in order to gain even more of an advantage. Anyone who has played the game Monopoly a few times knows that, while a wealth advantage may be hard to establish at first, such an advantage will allow you to rake in more and more income. Then suddenly you own everything. In society, too, wealth begets even more wealth. Since wealth is a key form of social power, this means inequality is at least partly a result of too much power.

Sometimes wealth accumulation by elites ends in revolution; other times it simply leads eventually to the failure of the overall economy. Either way, it seldom ends well. Walter Scheidel’s The Great Leveler (2017) is one of the most thorough studies to date of economic inequality in world history. Sadly, Scheidel finds that inequality has seldom been substantially reduced through peaceful means. Rather, the most sweeping leveling events resulted from four different kinds of powerful shocks to society. Scheidel calls these the “Four Horsemen” of leveling: mass-mobilization warfare (which we touched on in Chapter 4), transformative revolution, state failure, and lethal pandemics.

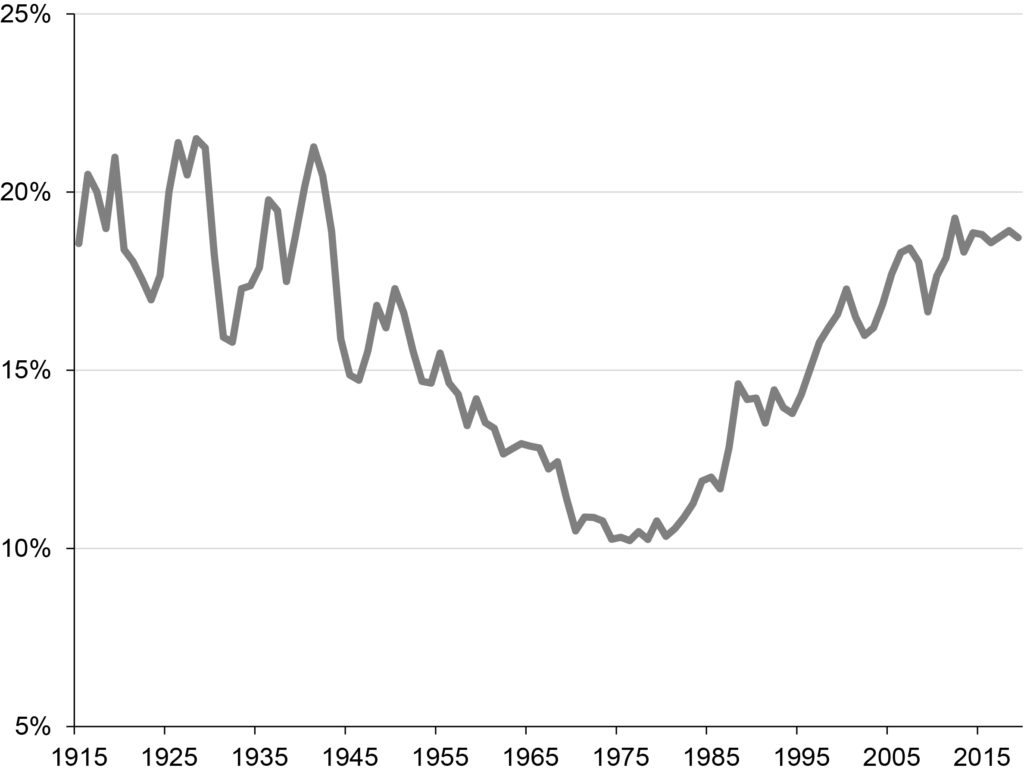

Economic historians (including Scheidel, Thomas Piketty, and Peter Turchin) have begun tracking inequality in terms of the proportion of income and wealth concentrated in the top one percent of society. US wealth inequality, measured this way, reached a high level at the turn of the 20th century; Turchin attributes this to elites’ disproportionate capture of increasing overall national wealth flowing from industrialization and trade.20 Then, following the Progressive-era reforms, as well as New Deal programs (including more steeply progressive taxation) created during the Great Depression, inequality declined until the mid-1970s. Scheidel argues that this period of economic leveling was largely due to the two World Wars, which drove governmental redistributive reforms. Turchin and Scheidel agree that, from the 1970s until the present, inequality has reasserted itself—partly due to Reagan-era tax cuts for the wealthy, and partly due to changes in the financial system (which we’ll discuss shortly).21

Sidebar 22: Inequality in Economic and Political Power in the US, and Its Consequences

The Gini index shows income distribution within a nation; the higher the Gini number, the greater the inequality. The Gini numbers for many nations, including the United States and China, have been increasing in recent years (during this period, the world’s poorest peoples and nations have seen only marginal improvements, at best, in per capita wealth and income). Most political and social scientists say this is a dangerous situation because very high levels of inequality erode the legitimacy of formal and informal governance, including both elected leaders and economic institutions. As wealth and income inequality grow, imbalances in political power tend to follow. For example, a recent study by political scientists Martin Gilens and Benjamin Page found that the policy preferences of the bottom 90 percent of wealth holders in the US are consistently ignored by lawmakers in deference to the desires of the top ten percent.25
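For readers curious how a Gini number is produced, here is a minimal sketch using one standard formula for a list of individual incomes. It returns a coefficient between 0 (everyone has the same income) and a value approaching 1 (one person has everything); published Gini indexes are often this figure multiplied by 100. The function name and the sample incomes are hypothetical, and official statistics rely on survey data and adjustments not modeled here.

```python
def gini(incomes):
    """Gini coefficient of a list of non-negative incomes.

    Uses the rank-weighted formula on the sorted values:
        G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n,  i = 1..n
    """
    values = sorted(incomes)
    n = len(values)
    total = sum(values)
    if n == 0 or total <= 0 or values[0] < 0:
        raise ValueError("need non-negative incomes with a positive total")
    weighted = sum(rank * x for rank, x in enumerate(values, start=1))
    return 2.0 * weighted / (n * total) - (n + 1) / n


if __name__ == "__main__":
    # Hypothetical four-person societies, for illustration only.
    print(gini([1, 1, 1, 1]))   # perfectly equal
    print(gini([0, 0, 0, 1]))   # one person holds all income
    print(gini([1, 2, 3, 10]))  # somewhere in between
```

With only four people, the most unequal case yields 0.75 rather than 1, because the upper bound of this formula is (n - 1)/n; it approaches 1 as the population grows.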

Throughout the past century, industrial nations have used progressive taxation—as well as public spending on health care, pensions, and unemployment insurance—as ways of restraining the tendency for wealth to become concentrated in ever-fewer hands (we’ll discuss these strategies at more length in Chapter 6). These policies have certainly helped, but they clearly weren’t full solutions to the inequality dilemma, in light of the recent trend toward greater concentration of wealth. In the US, new data shows that the income of the top one percent of earners has grown 100 times faster than the bottom 50 percent since 1970. Today, just eight men together enjoy as great a share of the world’s wealth as the poorer half of humanity—over 3.5 billion people. It is almost certain that this degree of inequality has never existed before.26

To gain a historical and systemic perspective on this issue, it’s helpful to understand wealth inequality in terms of the commons—the cultural and natural resources that are accessible to all members of a society, and not privately owned. In most pre-industrial economies, the commons included sources of food as well as natural materials for making tools and building shelters. People with little or no money could still subsist on commonly held and managed resources, and everyone who used common lands had a stake in preserving them for the next generation. During and especially after the Middle Ages in Britain and then the rest of Europe, common lands were gradually enclosed with fences and claimed as private property by people who were powerful enough to be able to defend this exclusionary appropriation, using laws and force of arms. The result was that people who would otherwise have been able to get by without money now had to buy or rent access to basic necessities. Again, the rich got richer, while the poor fell further behind.

The school of economics known as neoliberalism prescribes privatization (i.e., further exclusion) as the cure to nearly every economic ill. Thus, the past half-century—during which neoliberal economic ideas have prevailed throughout most of the world—has seen massive privatization of natural resources, industries, and institutions in the United States, in the United Kingdom, in the former Soviet Union and its Eastern Bloc of nations, and in poor, less-industrialized nations that supply resources to industrial economies.

Another cause of the recent increase in world (and US) wealth and income inequality is a process that’s come to be known as financialization. If the charging of interest on loans tends to pump money upward within the social pyramid, then it stands to reason that a significant expansion of total debt, kinds of debt, and ways of creating and managing debt would serve to accelerate the wealth pump. In recent decades, networked computers and global communications have made it possible to make money on trading and insuring many new kinds of financial investments. As a result, those who spend their lives chasing financial profit have seen their wealth and income rise much faster, on average, than those who work for an hourly wage or yearly salary.

For the past few generations, most people have been living under the assumption that, if we just increase the size of the pie (that is, increase overall wealth as measured by GDP), then inequality will be less of a problem: each person will have a bigger slice, even if a few get portions so huge that no individual could possibly consume so much. But that assumption has been shown to be false: we’re reaching limits to the expansion of the pie (i.e., economic growth is stagnating or reversing), and inequality is burgeoning to levels such that increasing numbers of people are questioning the legitimacy of a system that benefits elites so much and so unfairly.

If wealth is power, and if inequality is a problem of too much power, then there is really only one peaceful solution to the problem of growing inequality: reduce the wealth of those who have the most. Ultimately that means reducing the wealth-power not just of billionaires, but also of rich countries relative to poor ones. We must all aim for a relatively simple life that’s attainable by everyone, and that’s able to be sustained without harming ecosystems.

History, in Walter Scheidel’s view, is not encouraging in this regard. While he acknowledges the leveling effects of unionization, progressive taxation, and government redistributive programs, he sees these as historically dwarfed by the impacts of the Four Horsemen mentioned above. Nevertheless, policy-based leveling helps at the margins, and we would do well to anticipate the next major leveling event that’s beyond our control—which, as we’ll see later in this chapter, could take the form of a debt-deflation financial crisis.

Figure 5.4 United States top one percent income share (pre-tax).

Credit: World Inequality Database.

Pollution

In nature, waste from one organism is food for another. However, that synergy sometimes breaks down and waste becomes poison. For example, about 2.5 billion years ago cyanobacteria began to proliferate; because they performed photosynthesis, they gave off oxygen—far more of it than the environment could absorb. This huge, rapid influx of oxygen to the atmosphere was toxic to anaerobic organisms, and the result was a mass extinction event. Clearly, humans aren’t the only possible source of pollution.

However, these days the vast majority of environmental pollution does come from human activities. That’s because we humans are able to use energy and tools to extract, transform, use, and discard ever-larger quantities of a growing array of natural substances, producing wastes of many kinds and in enormous quantities.

The social power issues related to pollution can often be untangled simply by identifying who is polluting, and who is on the receiving end of pollution. The powerful tend to benefit directly from economic processes that produce waste while insulating themselves from noxious byproducts, whereas the powerless share much less in the economic benefits and tend to be far more exposed to toxins. Polluters often count on society as a whole to clean up their messes, while they keep the profits for themselves. Thus, the ability to pollute, without the requirement to fully account for that pollution and its impacts, is itself a form of power.

Nobody sets out to pollute. Pollution is always an unintended byproduct of some process that uses energy and materials for an advantageous purpose. Humans were already causing pollution in pre-industrial times—for example, when mining tin or lead, or when tanning leather near streams or rivers. Today, however, with much higher population levels, and with much higher per capita rates of resource usage, examples of environmental pollution are far more numerous, extensive, and ruinous.

Arguably, the modern environmental movement began with the publication of Rachel Carson’s Silent Spring (1962), which warned the public about the invisible dangers of new synthetic pesticides, such as DDT. These chemicals were hailed as giving humanity the power to control insect pests, which regularly decimated crops, and disease microbes that spread death and misery via illnesses like cholera and malaria. The benefits of pesticides were undeniable—but so were the unintended side effects. DDT accumulated in animal tissues and worked its way up the food chain; it caused cancer in humans and was even more harmful to bird populations. Carson’s book led to the banning of DDT in the United States and changed public attitudes about pesticides generally. Many other pesticide regulations followed. Yet, despite these successes, the overall global load of pollutants has continued to worsen, and new pesticides—often inadequately tested—have replaced DDT.

The pesticides most widely used today are collectively known as neonicotinoids; they are being blamed both for widespread crashes in the populations of bees and other insects, and for serious impacts to aquatic ecosystems worldwide.27 Multiple studies have linked bee colony collapse disorder—which has decimated bee numbers in North America and Europe—with neonicotinoids; some of the latest studies suggest the pesticides kill colonies slowly over time, with queen bees being particularly affected.28

Like pesticides, artificial ammonia-based fertilizers were welcomed as a humanitarian boon, dramatically increasing crop yields and staving off famine. But fertilizer runoff from modern farming creates “dead zones” thousands of square miles in extent around the mouths of many rivers. Fertilizer acts as a nutrient to algae, which proliferate and then sink and decompose in the water. The decomposition process consumes oxygen, depleting the amount available to fish and other marine life.

Air pollution from burning coal in China is so thick and hazardous that it causes nearly 5,000 deaths per year (millions more face shortened lives), and the haze sometimes drifts as far as the West Coast of the United States. A combination of firewood, biomass, and coal burning, compounded by population growth, has similarly resulted in deadly and worsening air quality in India. A study based on 2016 data showed that at least 140 million people there breathe air that is polluted ten or more times the World Health Organization’s safe limit. India is home to 13 of the world’s 20 cities with the worst air pollution.

Nuclear power plants supply electricity reliably; but, in rare instances where something goes terribly wrong, persistent radioactive pollution can result. Soil in areas around the melted-down nuclear reactors in Fukushima in Japan and Chernobyl in Ukraine will be radioactive for centuries or millennia. Attempts to stem the Fukushima disaster have produced more than a million tons of contaminated water stored in tanks at the site. As more water accumulates, cleanup managers see no solution but to release radioactive water into the sea.

Plastic packaging offers affordable convenience and protection, especially in the food industry, where it prevents the spoilage of thousands of tons of food annually. However, plastic particles (a large proportion of which originated as food packaging) in the Pacific Ocean have formed giant floating gyres, and it’s been projected that by 2050 the amount of plastic in the oceans will outweigh all the remaining fish.29 What’s more, plastic packaging leaches small amounts of organic chemicals, some known to cause cancer, into the foods it protects. Many of these chemicals are known to mimic the action of hormones, and are believed to contribute to diabetes, obesity, and falling sperm counts as well as other fertility problems, both in humans and other animals.

Another endocrine system-disrupting class of chemicals is known as PFAS (per- and polyfluoroalkyl substances), used, for example, in making Teflon for non-stick cookware.30 These chemicals have spread globally (they’ve been detected, for example, in Arctic polar bears and fish in South Carolina), and are estimated to be in the blood of over 98 percent of Americans.

A 2013 report by the UN Environment Programme and World Health Organization noted that endocrine diseases and disorders are on the rise globally, including cancers, obesity, genital malformations, premature babies, and neurobehavioral problems in children. Total incidences of human cancers are increasing at between one and three percent annually worldwide, according to some estimates.31

Immune system impacts, reproductive system impacts, and impacts on wildlife are all tied to chemicals that disperse in the environment without breaking down. At least 40,000 industrially produced chemicals are now present in the environment. Few are specifically regulated, and of those that are, regulation started long after their introduction. The initial burden is on those impacted to prove chemicals harmful, not on industry to prove them safe.

It is possible in some instances to reduce pollution to relatively harmless levels without having to forgo economic benefits, just by substituting safer alternatives for existing chemicals, fuels, and industrial processes. For example, many companies are developing and using bioplastics to replace plastics made from petrochemicals. However, it will take decades to identify and develop those safer alternatives in all instances; and, in many cases, alternatives may be more expensive or less functional. In the meantime, the only way to ensure human and ecosystem health is simply to stop using polluting chemicals, fuels, and processes. That means giving up the power to control our environment in many ways we currently do.

Overpopulation and Overconsumption

It’s often said that there is safety in numbers. But there is also power in numbers: a larger population size can sometimes translate to a bigger economy, a larger voter base, or a more formidable army—all of which confer advantages to nations or sub-national groups. In pursuit of such advantages, societies, and groups within societies, have long sought ways to increase their numbers.

As we saw in Chapter 3, the adoption of agriculture was likely both a result of crowding, and a facilitator of further population growth. But overall that growth was typically slow and uneven, punctuated by famines and plagues.

Big God religions, discussed in Chapter 4, based part of their success on their ability to convince their adherents to have more children, thereby out-competing rival groups. For example, the Bible enjoins believers to “be fruitful and multiply,” and census records today show that Christians, Muslims, Hindus, and Jews still outbreed the religiously unaffiliated.32 The Mormon Church likewise urges believers to have large families, and in just 170 years it has grown from a few hundred followers to 15 million globally. While missionary activities and conversion played a significant role in this expansion, high fertility has been responsible for a persistent, underlying growth trend for Mormonism. “It is no accident,” writes Ara Norenzayan in Big Gods, “that religious conservative attitudes on women’s rights, contraception, abortion, and sexual orientation are conducive to maintaining high fertility levels.”33

While agriculture enabled larger population sizes, and Big God religions promoted higher fertility among followers, for the past couple of millennia actual rates of world population growth were typically a tiny fraction of one percent annually. Still, simple arithmetic shows that even slow rates of increase, if compounded, can eventually lead to very large numbers. At the start of the 19th century, English cleric and scholar Thomas Malthus wrote An Essay on the Principle of Population, in which he hypothesized that any improvements in food production, rather than resulting in higher living standards, would instead ultimately be outpaced by population growth, since “the power of population is indefinitely greater than the power in the earth to produce subsistence for man.”34 Malthus, a deeply conservative thinker, wrote his essay to counter the utopian expectations of writers like William Godwin, who believed that progress would lead to a future human society characterized by freedom and abundance.

Throughout the two centuries since Malthus, evidence has consistently contradicted his dire warnings, and his name is now invoked to dismiss any and all long-range pessimism regarding population growth and resource availability. As we’ve seen, guano deposits in South America provided fertilizer for farmers in Europe and the Americas in the 19th century; and just as those deposits were exhausted, new synthetic fertilizers made from fossil fuels became available. Together with tractors, new farming techniques, other soil amendments, and new synthetic pesticides, ammonia fertilizers enabled a dramatic increase in farm yields. Millions of acres of forest were cleared to make room for more farms. At the same time, better sanitation and new disease-fighting drugs lowered death rates. As a result, while population has grown roughly eight-fold since the publication of Malthus’s book, food production has more than kept pace.

Still, the realistic prospect of continuing to expand agricultural yields at the historically recent rate is widely disputed. New farmland can be brought into production only by taking more habitat away from other creatures, thereby exacerbating the biodiversity crisis. Simply applying more fertilizer won’t help much, as the limits of its effectiveness have nearly been reached, and environmental impacts from excessive fertilizer use are soaring.35 Meanwhile, current farming practices are resulting in the erosion or salinization of tens of billions of tons of topsoil annually worldwide.36 The difficulty of continuing to defeat famine with current methods is at least implied by the recent proliferation of calls for completely new food production methods that don’t require soil or agricultural land.37

World population growth rates in the mid-20th century were probably the highest in all of human history. Between 1955 and 1975, population increased at over 1.8 percent per year, with a peak rate of growth of 2.1 percent occurring between 1965 and 1970. For perspective, recall from Chapter 1 that an annual growth rate of one percent results in a doubling of population size in 70 years; two percent growth doubles population in just 35 years.
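For readers who like to check the arithmetic, the doubling times quoted above can be reproduced with a few lines of Python. This is only a minimal sketch: it assumes a constant compound annual growth rate, which real populations never maintain for long, and the function name `doubling_time_years` is my own invention for illustration.

```python
import math


def doubling_time_years(annual_rate_percent: float) -> float:
    """Years needed for a quantity to double at a constant compound annual rate."""
    rate = annual_rate_percent / 100.0
    # Solve (1 + rate) ** t == 2 for t.
    return math.log(2) / math.log(1 + rate)


# Rates mentioned in the text: 1%, 1.8%, 2%, and the 2.1% peak.
for pct in (1.0, 1.8, 2.0, 2.1):
    years = doubling_time_years(pct)
    print(f"{pct}% per year: doubles in about {years:.1f} years")
```

The results for one and two percent come out very close to the familiar rule-of-thumb figures of 70 and 35 years.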

The percentage rate of increase is one way to understand population growth; another is to track the actual numbers of humans added each year (births minus deaths). This annual increase peaked at 88 million in 1989, then slowly declined to 73.9 million in 2003, then rose again to 83 million as of 2017—even though the percentage rate of growth had declined to about 1.2 percent. A declining rate of growth can still produce a larger annual increase in human numbers because it’s a smaller percentage of a much bigger number (this is one of the devilish consequences of exponential growth). We’re currently adding about a billion new humans every 12 years.
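The point about a falling rate producing a rising annual increase can be sketched in Python. The two population and rate pairs below are hypothetical round numbers chosen only to make the effect visible; they are not historical data. The one figure taken from the text is the increase of 83 million per year, used to check the "billion every 12 years" estimate.

```python
def annual_increase(population: float, growth_rate_percent: float) -> float:
    """People added in one year: population times the growth rate."""
    return population * growth_rate_percent / 100.0


# Hypothetical illustration: a smaller population growing faster...
earlier = annual_increase(5.0e9, 1.5)
# ...versus a larger population growing more slowly.
later = annual_increase(8.0e9, 1.0)

print(f"earlier: {earlier / 1e6:.0f} million added per year")
print(f"later:   {later / 1e6:.0f} million added per year")

# From the text: about 83 million people added per year as of 2017.
years_per_billion = 1.0e9 / 83.0e6
print(f"years to add one billion: about {years_per_billion:.1f}")
```

Even though the growth rate in the second case is a third lower, the yearly addition is larger, because the rate applies to a much bigger base.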

Generally, wealthier nations have seen a decline in their population growth rates in recent decades, but annual growth rates remain above two percent in many countries of the Middle East, sub-Saharan Africa, South Asia, Southeast Asia, and Latin America. The world growth rate is projected to decline further in the course of the 21st century. However, the United Nations projects that world population will reach more than 11 billion by 2100—with most of that growth occurring in poorer countries where it will be difficult for people to obtain the additional housing and food they will need.

If human numbers were to continue growing at just one percent per year, population would increase to over 115 trillion during the next thousand years. Of course, that’s physically impossible on our small planet. So, one way or another, our population growth will end at some point. The question is, how—through plan or crisis?
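The thousand-year figure is easy to verify. The sketch below assumes a starting population of 7.5 billion, which is my assumption (roughly the world total in the late 2010s) and is not stated in the text. It also assumes an unvarying one percent rate, which is exactly the unrealistic premise the paragraph is meant to expose.

```python
def project_population(start: float, annual_rate_percent: float, years: int) -> float:
    """Compound a starting population forward at a constant annual rate."""
    return start * (1 + annual_rate_percent / 100.0) ** years


# Assumption (not from the text): starting population of about 7.5 billion.
ASSUMED_START = 7.5e9

# How many times larger anything becomes after 1,000 years at 1% per year.
growth_factor = project_population(1.0, 1.0, 1000)
projected = project_population(ASSUMED_START, 1.0, 1000)

print(f"growth factor over 1,000 years: about {growth_factor:,.0f}")
print(f"projected population: about {projected / 1e12:,.0f} trillion")
```

One percent compounded for a thousand years multiplies the starting number roughly twenty-thousand-fold, so any starting population above about 5.5 billion clears the 115 trillion mark.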

Population growth makes every environmental crisis harder to address,38 and contributes to political and economic problems as well (for example, nations with high population growth rates are more likely to have authoritarian governments).39 Reproduction is a private decision, a human right. Yet, quite simply, the more children we have now, the more human suffering is likely to occur later this century. If we don’t find ways to reduce our numbers humanely, nature will find other ways.

Demography is the statistical study of population, and demographers speak of a “demographic transition,” which describes the tendency for population growth rates to decrease as nations become wealthier. Most policy makers hope to avoid the problems associated with population growth simply by pursuing more economic growth. However, as we have already seen, further economic growth is incompatible with action to halt climate change and resource depletion.

Ecological footprint analysis measures the human impact on Earth’s ecosystems. Our ecological footprint is calculated in terms of the amount of land and sea that would be needed to sustainably yield the energy and materials we consume. According to the Global Footprint Network, at our current numbers and current rate of consumption we humans would need 1.5 Earths’ worth of resources to sustainably supply our current appetites. At the US average amount of consumption, we would need the equivalent of at least four Earths to sustain us. Of course, we don’t have four or even one-and-a-half planets at our disposal—yet we are still in effect using more than one Earth by drawing down resources faster than they can regenerate, thereby reducing the long-term productivity of ecosystems that would otherwise be available to support future generations.

Human impact on the environment results not just from population size, and not just from the per capita rate of consumption, but from both factored together (with further variation in impact depending on the technologies applied to productive and consumptive purposes). Clearly, different countries’ per capita rates of consumption vary greatly: the ecological footprint of the average American is almost 11.5 times as big as that of the average Bangladeshi. On the other hand, Bangladesh has a population density of 1,116 persons per square kilometer, as compared with the US, with a population density of 35 people per square kilometer. Within both nations, there is also a great deal of economic inequality—and thus a vast variation in levels of consumption. Overpopulation is tied to other problems, and solving it won’t automatically solve these others; but ignoring it tends to make related problems worse.
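To see what "factored together" means in practice, one can multiply the two figures given above for each country. The sketch below is a deliberately crude illustration: it uses only the numbers in the text, expresses per capita footprint in relative units with the average Bangladeshi set to 1.0 (my normalization), and ignores trade, land productivity, and technology. The function name is hypothetical.

```python
def footprint_per_km2(density_per_km2: float, per_capita_footprint: float) -> float:
    """Relative ecological demand per square kilometer of national territory."""
    return density_per_km2 * per_capita_footprint


# Figures from the text; footprints are in relative units (Bangladesh = 1.0).
bangladesh = footprint_per_km2(density_per_km2=1116, per_capita_footprint=1.0)
united_states = footprint_per_km2(density_per_km2=35, per_capita_footprint=11.5)

print(f"Bangladesh:    {bangladesh:.1f} relative units per km2")
print(f"United States: {united_states:.1f} relative units per km2")
print(f"ratio (Bangladesh / US): {bangladesh / united_states:.2f}")
```

On this rough measure, high density more than offsets low individual consumption in Bangladesh, while high individual consumption dominates in the US. Neither factor alone tells the story.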

There are many ways to avoid recognizing and confronting the problems of overpopulation and overconsumption. Pointing fingers and insisting that other nations are to blame is one; another is to hold firmly to the illusion that economies can somehow continue to grow without using more energy and materials, thereby providing for more people without further depleting or damaging nature.40 But, once again, a problem ignored is still a problem.

Giving up population growth means giving up a certain kind of power—one that many groups within society currently prize. But, for humanity as a whole, the power of population growth is ultimately self-defeating, and we are approaching or beyond natural limits. If we do not choose to rein in our power of population growth, we will face a series of snowballing crises that will disempower us in ways none of us would choose. (We’ll return to the discussion of population growth and what to do about it in Chapter 7.)

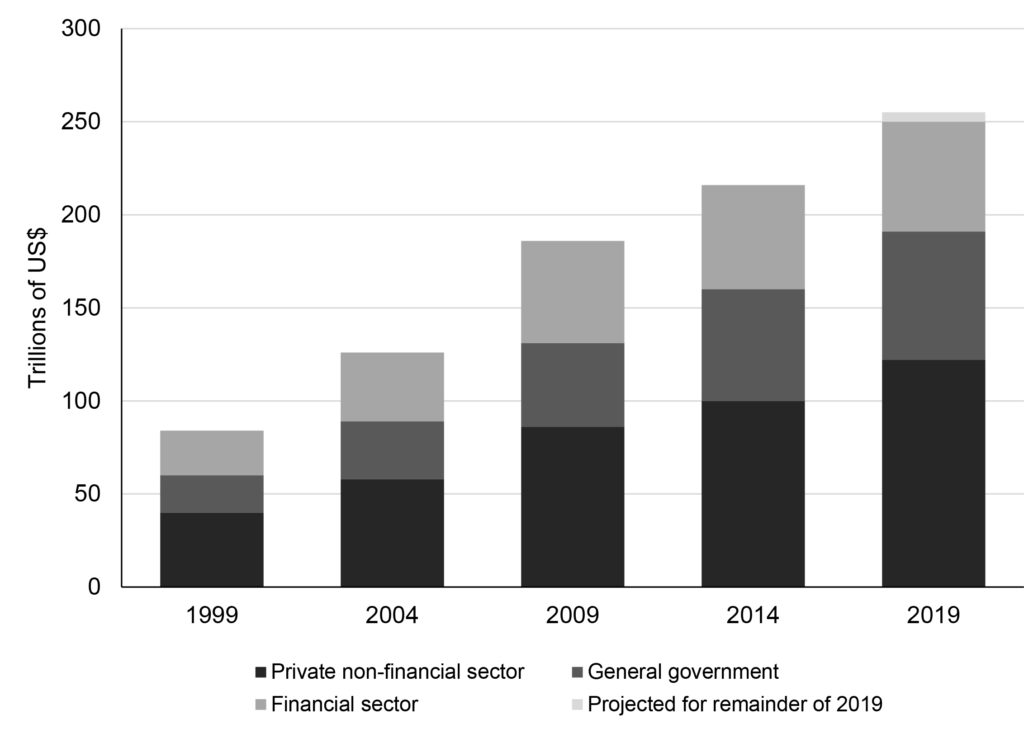

Global Debt Bubble

As we saw in Chapter 3, debt and money are inseparable, and money can be understood as quantifiable, storable, and transferrable social power. Debt has the further characteristic of time-shifting consumption: with it, we can consume (thus enjoying the fruits of power) now, but pay later. This can create problems when “later” finally arrives.

Many individuals, and whole societies, have overindulged in debt throughout history as a way of making life easier for the moment, with only a vague hope of paying it off in the future; or as a way of investing capital with the expectation of earning interest or dividends, but without adequate understanding of the risks involved. As societies pass through periods of optimism and expansion, there is a tendency for people and businesses to take on larger loads of debt. Such episodes of widespread borrowing and lending are sometimes called inflationary bubbles, and they can be based on irrational group psychology. As manias crash on the shoals of reality, debts can be defaulted upon in large numbers, initiating a deflationary depression. Such boom-and-bust cycles can be traced back to the first societies to use money and debt.41

In Chapter 4, we saw how decades of access to cheap, abundant fossil fuels led to an unprecedented expansion of population, production, and consumption: the Great Acceleration. That astonishing period of expansion was marred by temporary financial setbacks: repeated recessions, panics, and depressions (for example, in the US, the recessions of 1882-85 and 1913-14; the panics of 1893, 1896, 1907, and 1910; and the depressions of 1873-79 and 1920-21, capped of course by the Great Depression of 1929-41). The last of these, especially, along with the two World Wars, led to a series of general overhauls of the global monetary and financial systems.

For many centuries, gold and silver had served as anchors to national monetary systems and international trade: while paper deposit receipts for precious metals could be issued in excess of actual metal holdings (usually by banks or governments) and used as money, the knowledge that an eventual accounting or reckoning could occur, requiring the surrendering of actual gold or silver, usually kept such inflationary temptations in check. Instances of hyperinflation were relatively rare (one notable exception was the French hyperinflation of 1789–1796).

However, by the late 19th century, it was clear that the world had entered a new era of abundant energy, technological innovation, expanding industrial production, scientific discovery, and financial opportunity. We’ve already seen (in Chapter 4) how this led to a crisis of overproduction, which was largely solved with expansions of advertising and consumer credit. But the Great Acceleration also posed a monetary problem: there was simply not enough gold and silver money in existence to facilitate all the investment and spending that were otherwise possible. The solution consisted of a gradual retreat from the use of precious metals and an increasing reliance on fiat currencies (i.e., currencies not backed by any physical objects or substances) that could be called into existence by banks. The rules for the creation of money were set forth by new central banks (including the US Federal Reserve Bank, created in 1913), which were themselves authorized by national governments. Governments could also create money by issuing bonds (i.e., certificates promising to repay borrowed money at a fixed rate of interest at a specified time)—a means of deficit spending.

Anticipating growth in tax revenues, and wishing to fund public services and military expansion, governments tended to overspend and thereby to become over-indebted. Nevertheless, as economies snowballed during the Great Acceleration, government debt came to be normalized. Consumer credit and business debt also ballooned, and were also accepted as innocuous facilitators of growth.

The result was, of course, a steep increase in overall debt. This could be measured in several ways. The first was simply the number of units of a given national currency owed. But large increases in debt and spending tended to lead to inflation, diluting the actual purchasing power of the currency. So, second, debt could be translated to its purchasing-power equivalent. Finally, government debt could be measured in terms of the debt-to-GDP ratio (too much debt per unit of GDP has historically led to crises in which governments have been unable to make payments on debt; that in turn has led to financial panics in which large numbers of people suddenly wished to sell stocks and bonds, thus dramatically lowering their value). This ratio has fluctuated during the modern industrial era, for the US and the world as a whole, but in recent years it has grown significantly, even while GDP has been increasing.
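The three ways of measuring debt can be compared side by side in a short Python sketch. All of the figures below are hypothetical, invented purely for illustration, and the function names are my own. The deflation step assumes a simple price index with a base value of 100.

```python
def real_debt(nominal_debt: float, price_index: float, base_index: float = 100.0) -> float:
    """Debt restated in base-year purchasing power, using a price index."""
    return nominal_debt * base_index / price_index


def debt_to_gdp(nominal_debt: float, nominal_gdp: float) -> float:
    """Debt as a fraction of one year's economic output."""
    return nominal_debt / nominal_gdp


# Hypothetical economy in two different years (arbitrary currency units).
year_a = {"debt": 1000.0, "price_index": 100.0, "gdp": 2000.0}
year_b = {"debt": 3000.0, "price_index": 150.0, "gdp": 3000.0}

for label, y in (("year A", year_a), ("year B", year_b)):
    print(
        f"{label}: nominal debt {y['debt']:.0f}, "
        f"real debt {real_debt(y['debt'], y['price_index']):.0f}, "
        f"debt-to-GDP {debt_to_gdp(y['debt'], y['gdp']):.2f}"
    )
```

In this made-up case nominal debt triples, inflation-adjusted debt only doubles, and the debt-to-GDP ratio rises from 0.5 to 1.0 even though GDP itself has grown by half.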