Power in the Holocene: The Rise of Social Inequality


Competition within groups destroys cooperation; competition between groups creates cooperation.

― Peter Turchin, Ultrasociety

Being powerful is like being a lady. If you have to tell people you are, you aren’t.

― Margaret Thatcher

All governments suffer a recurring problem: Power attracts pathological personalities. It is not that power corrupts but that it is magnetic to the corruptible.

― Frank Herbert

Political power is built on physical power.

― Richard Wrangham and Dale Peterson, Demonic Males

While Chapter 2 mostly explored humans’ early development of physical powers, this chapter focuses more on social power. If physical power is the ability to do something, social power is, as I have already suggested, the ability to get other people to do something. The invention of language, discussed in the previous chapter, was the ultimate source of most of our uniquely human social power. But social power has evolved since then, in dramatic and often disturbing ways.

As mentioned at the end of the last chapter, a significant shift in human social evolution started roughly 11,000 years ago, probably set in motion by yet another change in the global climate. The Pleistocene epoch had begun 2.6 million years previously and had featured 11 major glacial periods punctuated by occasional warm intervals. Now glaciers over much of North America and Eurasia melted, ushering in the Holocene epoch, during which all of recorded human history would unfold.

Sidebar 9: Measures of Social Power

Gardening, Big Men, and Chiefs: Power from Food Production

One might assume that the end of an ice age must have made life easier for humanity. In some places, that was no doubt the case, especially as the warmer climate stabilized. But warming was not gradual and uniform; it occurred in fits and starts. The shift from a colder climate to a warmer one resulted in rapidly rising sea levels, as well as localized floods alternating with dry conditions. Many animals went extinct during this period, and it appears that not all of them were victims of humans’ improving hunting skills. Times must have been tough not just for these animals, but for at least some humans as well.

All human species other than sapiens were now gone. For countless millennia, everyone had subsisted by hunting and gathering, a way of life characterized by horizontal power relations: all adults in a given group generally had a say in organizing whatever work was done. But as climatic and ecological conditions changed, human societies were rapidly adapting to a variety of new niches. With the dawn of the Holocene, people started finding new ways of obtaining their food; and these innovations in food production would gradually lead to the development of vertical social power, in which an individual or small group determined what would be produced and how, and to whose benefit.

If the shift from scavenging, hunting, and gathering to food production was invited partly by the opportunity to stay in one spot and enjoy a seasonal surplus, it was also mandated by necessity. At this point, all human groups had language and tools. Therefore, their ways of life were malleable and capable of adapting rapidly via cultural evolution. Adaptation was now needed, not only because of shifting environmental circumstances, but also because Homo sapiens were just about everywhere. Africa, Eurasia, and Australia had been peopled for many thousands of years, and North America and South America were rapidly becoming populated as well.1 Only the Pacific islands would remain free of humans for another few thousand years, awaiting the development of better navigation skills. Competition for living space was increasing. And the evidence suggests that levels of between-group violence were increasing too, as groups with adjacent or overlapping hunting territories saw herds of prey animals thinning.

Intensifying warfare meant that people had to do something or risk losing everything. The evidence suggests they did two things: they started living in larger groups (so as to better resist raids, or to more effectively carry them out); and, in order to support their larger group size, they began to grow food.

The first permanent settlements were likely located in ecologically rich and diverse wetlands, where people could continue to hunt and gather wild foods while also domesticating grains and useful animals. The earliest evidence of this settled way of life—dating from roughly 8,500 years ago—has been found in the Tigris-Euphrates region, where many tiny Mesopotamian kingdoms would later flourish. It would take another 4,000 years before sedentism and field crops would lead to the beginning of what might be called civilization.

Part of the motive for settling down had to do with the opportunity provided by geography. These centers of early settlement were, after all, regions of great ecological abundance. But that made them desirable to many people. As population densities grew, so did conflict. People had to defend their territories as they increasingly tended to stay in one place. Gradually, more food calories came from planting, fewer from hunting. People had previously figured out the basic life cycle of food plants, but they’d had insufficient motive to settle down to the hard and unhealthy life of planting and tending crops. Increasingly, that motive was inescapable.

As communities grew in size, keeping them socially unified required occasional large-scale meetings and rituals. Suddenly in the early Holocene—at first in what is now Turkey, but later throughout Europe and the British Isles—we see examples of monumental architecture that seem to mark ceremonial centers where thousands gathered seasonally. People’s food, work, political organization, and spirituality were all being transformed.

Anthropologist Marvin Harris was one of the first scientists to grasp the key relationships between how people get their food and how they organize themselves and make sense of the world. He observed that all societies operate in three realms. First is the infrastructure, which consists of the group’s ways of obtaining necessary food, energy, and materials from nature. Second is the structure, consisting of decision-making and resource-allocating activities—the group’s political and economic relations. Finally, there is the superstructure, which is made up of the ideas, rituals, ethics, and myths that enable the group to explain the universe and coordinate individuals’ behavior.

Change in any one of these three realms can affect the other two: the emergence of a new religion or a political revolution, for example, can alter people’s material lives in significant ways. However, observation and analysis of hundreds of societies show that the way people get their food is a reliable predictor of most of the rest of their social forms, including their decision-making and child-rearing customs, their spiritual practices, and so on. That’s why anthropologists commonly speak of hunter-gatherer societies, simple and complex horticultural societies, agricultural societies, and herding societies. Harris called his insight “infrastructural determinism”; we might simply say, food shapes culture.2

The transition to agriculture had many stages and jumping-off points. While some hunter-gatherer societies with permanent settlements, stone houses, social complexity, and trade were appearing in alluvial plains—at first in the Middle East, but later in China, India, and the Americas—others remained small and simple. Archaeologists are still piecing together the evidence of when and how planting and harvesting emerged.3

It’s clear, though, that humanity’s first major innovation in food production consisted of planting small gardens, typically using the same patch of land for several years until the soil became depleted, then moving on. That patch might be left fallow for 20 or 30 years before being cultivated again. Hunting still supplied some nutrition, as did fishing in the case of groups living near sea coasts, lakes, or rivers. Increasingly, people lived in villages, but these were small and movable. Anthropologists call groups who live this way “simple horticultural societies.”

Gardening enabled the production of a seasonal surplus, which could be stored in preparation for winter or as a hedge in case of a poor harvest next year. But production of a significant surplus required that people work harder than they otherwise needed to just to satisfy their immediate needs. How to unify everyone’s resolve to work harder than was absolutely necessary? Societies in many parts of the world came up with the same solution: the Big Man. Within the group, one individual (nearly always male, according to the available evidence) would set an example, working hard and encouraging his relatives and friends to do so as well. The payoff for all this toil would come in the form of huge parties, thrown occasionally by the Big Man and his crew.

Being a Big Man led to influence and prestige: people listened to you and respected you, and you could represent your group at inter-group ceremonies, where you competed for status with other regional Big Men. But getting to be a Big Man required that you give away nearly all of your wealth every year; moreover, other males in your group were always aspiring to be Big Men, too, so there was never any assurance that you could maintain your status for many years or pass it along to your sons. And a Big Man couldn’t force others to do anything against their will. Even though one individual had acquired status, at least temporarily, society was still highly egalitarian.

It sounds like a pretty good way to live. However, in places that were becoming more densely populated, horticultural societies engaged increasingly in warfare, as surviving horticultural societies have continued to do right into modern times. Take, for example, the Mae Enga, a Big Man horticultural society in New Guinea that was researched extensively in the 1950s and ’60s. New Guinea is, of course, an island, and by the time anthropologists arrived it already hosted as many people as could be supported by the prevailing means of food production.4

The Mae Enga then numbered about 30,000, living at a density of 85 to 250 people per square mile. The society as a whole was divided into tribes, and those were subdivided into clans of 300-400 people. Pairs of clans often fought with one another over gardening space, and the intensity of warfare was high, with up to 35 percent of men dying not of old age, but of battle-inflicted wounds. Losing a war might mean having to abandon your territory and disperse to live among other clans or tribes.

The Mae Enga, like many other societies that lived in groups closely packed together, all subsisting in nearly identical ways, but separated by seas, mountains, or forests from the rest of the world, continued to pursue the simple horticultural way of life for millennia. However, in some less naturally bounded areas, another shift in food production and social organization occurred, starting roughly 8,000 years ago. With increasingly frequent raids and other conflicts, communities began to band together into even larger units in order to protect themselves or to increase their collective power vis-à-vis other groups. Living now in big, permanent villages, and with population still growing, people had to find ways to further intensify their food production. While still using simple digging sticks for cultivation, they more often kept domesticated animals; meanwhile, they left land fallow for shorter periods, five years or fewer, and sometimes fertilized it with human or animal manure. Many of these groups employed terracing and irrigation as well.

As these transformations were taking place, the role of Big Man gave way to that of chief. Since warfare was becoming more intense and frequent, leadership took on more authoritarian qualities. Many societies designated different chiefs for peace and wartime, with differing kinds and levels of authority. But gradually, in many societies, war chiefs assumed office on a permanent basis. Chiefs often had the authority to commandeer food and other resources from the populace, and in some cases were able to keep an unequal amount of wealth for themselves and their families. Over time, their position became hereditary. Also, an increasing portion of production was directed toward exchange rather than immediate consumption, as trade with other groups expanded.

The Cherokee of the Tennessee River Valley, prior to European invasion and conquest, were a fairly typical chiefdom society. They traced their kinship via the mother, and women owned their houses and fields. Each family maintained a crib for maize to be collected by the group’s chief, who maintained a large granary in case of a poor yield or for use during wartime. The chiefs of the seven Cherokee clans convened in two councils, where meetings were open to everyone, including women. The chiefs of the first council were hereditary and priestly, and led religious activities for healing, purification, and prayer. The second council, made up of younger men, was responsible for warfare, which the Cherokee regarded as a polluting activity. After a war, warriors needed to be purified by the elders before returning to normal village life.

Anthropologists call chiefdoms like this “complex horticultural societies.” Compared to simple horticulture organized by Big Men, complex horticulture required working even harder. But, by this time, many regions had become so densely populated that it was impossible for people to return entirely to the easier life of hunting and gathering or even simple horticulture, and, in many cases, there was little choice but to continue intensifying food production.

From the standpoint of social power, the advent of chiefs represented a significant development. For the first time in the human story, a few individuals were able to commandeer more wealth than anyone else around them, and able also to pass their privileges on to their hereditary successors. Social power was becoming vertical. But a far more fateful shift would occur around 6,000 years ago: it culminated in the formation of the first states, which produced food via field crops and plows (i.e., through agriculture rather than horticulture), and which were ruled by divine kings.

Plow and Plunder: Kings and the First States

Cultural evolution theorists describe the process we’ve been tracing, in which competition between societies drove intensifying food production and population growth, by way of a controversial idea: group (or multilevel) selection. Whereas evolution typically works at the level of individual organisms, group selection kicks in when a species becomes ultrasocial. Then, whenever variations arise between groups within the species, competition between the groups selects for the fittest group. Some evolutionary theorists, such as Steven Pinker, resist the notion of group selection, arguing that new traits in highly social species can still be accounted for with the tried-and-true concept of individual natural selection. But, in my view, when seen through the lens of group selection, the development of human cultures through the millennia becomes far easier to understand.

Three ingredients are necessary for the engine of group selection to rev up: there must already be high levels of intragroup cooperation; there must be sources of variation within groups (new behaviors, tools, institutions, and so on); and there must be sources of competition between groups. In the early Holocene, human societies in several regions had all three ingredients in place. These were places where large numbers of human groups already existed, jostling against one another’s boundaries. All of these groups were themselves the products of earlier cultural evolution that had led to the development of language and high levels of intragroup cooperation. Cultural variations were present, perhaps having appeared in further-flung regions, then migrating to these edges of territorial overlap, in which resources were relatively abundant. Competition inevitably arose over access to these resources, often leading to war. The result was even more cooperation within societies, and the tendency for societies to become larger, more technologically formidable, and more internally complex so as to more successfully compete against their neighbors.

According to the latest thinking among scientists like Peter Turchin, who specialize in studying cultural evolution via group selection, shifts in food production and social organization were motivated primarily by population growth and warfare.5 Continually vulnerable to raids from neighboring groups, communities had little choice but to find ways of increasing their scale of cooperation. That meant building alliances or absorbing other groups. But as societies became larger, their governance structures had to evolve. When it became no longer possible to know everyone else in your group and to make collective decisions face-to-face, a form of rigid hierarchy tended to spring up. This eventually took the form of the archaic state.

All of this took time. Between four and six millennia would elapse between the appearance of societies relying on gardening, using simple digging sticks, and the advent of early agricultural states depending on field crops and plows. The latter would constitute a milestone in cultural evolution. States arose independently in several places, and at somewhat different times: around 6,000 years ago in Mesopotamia and southwestern Iran; around 2,000 years ago in China, the Andes, Mesoamerica, and South Asia; and 1,000 years ago in West Africa. The state was a social innovation on five levels—political, social-demographic, technological, military, and economic—and it could concentrate far more power than simple and complex horticultural societies.

Politically, the state was defined by the advent of kings. With increasing levels of between-group conflict, war chiefs had begun not only to remain in office permanently, but also to assume the ceremonial duties and religious authority of the former peace chiefs. Gradually, they became hereditary monarchs with ultimate, divinely justified coercive power over all members of the group. The king no longer simply had the duty to maintain customs, but could make laws, which were enforced under threat of violence exercised by officers of the state on the king’s behalf. Those who broke the law, however arbitrary that law might be, were, by definition, criminals. The king claimed ultimate ownership of his entire governed territory, and also claimed to be the embodiment of the high god; divine privileges extended also to his family.

Socially and demographically, the state came to be defined by its territorial boundary. Successful groups were able to defend larger and more stable geographic perimeters, and began to identify themselves as much by place as by language or other cultural traits. The state gradually became multi-ethnic and multilingual.

As societies grew in population size, increasingly incorporating members absorbed from other groups, kinship-based informal institutions for meeting people’s material, social, and spiritual needs no longer worked well. This helped drive the evolution of royal law as a substitute for tradition and custom in regulating behavior.

In addition, the agricultural state was characterized by full-time division of labor. The great majority (often 90 percent or more) of the populace were peasants working the land, or captive slaves working in mines, quarrying stone, felling timber, dredging, rowing ships, and engaging in similar sorts of forced drudgery. The rest of the population at first consisted of the royal family, their attendants, priests, and full-time soldiers. Gradually, the professional classes expanded to include full-time scribes, accountants, lawyers, merchants, craftspeople of various kinds, and more.

Finally, agricultural states were defined demographically by the existence of cities—permanent communities with streets, grand public buildings usually made of stone, and a high density of occupants numbering in the hundreds or thousands. Only a minority of the population lived within cities, but cities were the political, commercial, ceremonial, and economic hubs of the state. Food was produced as close to the city as possible, so state territories initially often comprised only a few square kilometers.

Technologically, the agrarian state depended on one innovation above all others—the plow, which enabled the planting of field crops, typically grains such as wheat, barley, maize, or rice. Working hard, a farming family could produce a surplus of grain that the king claimed on behalf of the state as a tax. In return, the state provided protection from raids and also held grain in storage in case of poor harvests.

States also competed with each other to develop more effective weapons—from more powerful bows and sharper and stronger swords to armor. Competition for better weapons in turn drove innovations in metallurgy—leading to the adoption first of copper, then of bronze, and finally of iron blades and other tools.

Militarily, states benefited (vis-à-vis other human groups) by employing full-time specialists in violence (i.e., soldiers), as well as full-time specialists in motivating, managing, and strategically deploying soldiers, and full-time specialists in making and refining weaponry. The development of organized military power occurred by trial and error, and entailed many setbacks. Disciplined, highly motivated, and well-equipped soldiers were formidable on the battlefield, but smaller forces could still prevail if they relied on the element of surprise (guerrilla-style warfare has continued to be effective up to the present). The net result was a continuous arms race and frequent, deadly warfare.

Economically, the state functioned as a wealth pump, with surplus production from the peasantry continually being funneled via taxes upward to the king, the king’s family, the priesthood, and the aristocracy, which at first consisted primarily of elite soldiers and their families. Like taxes on the peasants, increasing trade (mostly of luxury goods) primarily benefited the upper classes. The operation of the wealth pump, over time, tended to generate social cycles: as elites captured an ever-larger share of the society’s overall wealth, peasants became increasingly miserable. Meanwhile, the number of elites and elite aspirants tended to grow until not all of them could enjoy the privileges they wanted, thus leading to competition among elites. The society would become unstable, a condition that tended to persist and worsen before culminating in civil war or a bloody coup. Periods of internal strife thus alternated with times of consolidation and external conquest.

In addition, the idea and practice of land ownership—unknown previously—became an essential means of organizing relations between families, and between people and the state. Ownership demanded an entirely new way of thinking about the world, one in which sympathies with nature would recede and numerical calculation would play an increasing role.

It is no accident that all early autocratic states adopted grains (primarily wheat, barley, millet, rice, and maize) as their main food sources. Grains have a unique capacity to act as a concentrated store of food energy that is easily collected, measured, and taxed. Grains’ taxability was especially consequential: without taxation, it’s likely that the wealth pump could not have emerged, nor could centralized, vertical-power government.

Innovations from any one of these five realms (political, social-demographic, technological, military, and economic) could spur innovations in other realms. For example, full-time division of labor tended to stoke technological change, as professionals had the resources with which to experiment and invent, refining weapons and farm tools. New weapons enabled the growth of military power. And new technologies also contributed to economic change by generating new professions and new sources of wealth.

Meanwhile, innovations in one kingdom were quickly adopted by neighboring ones, as states continually competed for territory. Failure to keep up might doom one’s group to conquest, captivity, or dispersal. Cultural evolution had many ingredients, but war was its main catalyst.

Environmental impacts were a source of instability. Previously, people had often been able to escape undesirable environmental consequences simply by moving (recall that horticultural societies left their fields fallow for many years before returning to cultivate the same spot). But farmers living in agricultural states had no option but to stay put. Plows loosened and pulverized the soil surface and enabled the farmer to easily remove weeds. Over the short term, this boosted crop yields; however, over the longer term, it led to erosion. Also, irrigation led to salt buildup in soils (as it still does today). Meanwhile, the cutting of trees to build cities depleted local forests. As a result of these practices, the cradle of civilization, known as the “Fertile Crescent” of the Middle East, is now mostly desert. Ecological ruin was common in other centers of civilization as well.

The earliest civilizations (a term derived from the Latin civis, meaning “citizen,” and related to civitas, meaning “city”) sometimes enjoyed unusually favorable natural environmental conditions. Notably, Egypt benefited from the annual overflow of the Nile, which replenished topsoil for farmers. As a result, even though Egypt had its political ups and downs, it never succumbed to the same degree of ecological devastation that Mesopotamia eventually did. Gradually, relatively sustainable farming practices emerged here and there, such as the Chinese systematic use of human and animal manure to maintain soil fertility, or the Native American practice of intercropping (planting nitrogen-fixing beans together with squash and maize).

For the first few centuries of their existence, states had art and architecture but no writing. Because they left no histories, we have limited insight into how people in these societies thought and how they treated one another; what we do know comes from excavating their settlements and cataloging their more durable weapons and ornaments. These signs point to the brutality of rigid hierarchies, in which kings ruled with absolute power. Slavery was universally practiced, and one of the main incentives to warfare was the capture of men, women, and children for purposes of forced labor and breeding. Signs of widespread human sacrifice are unmistakable. The world had never before, and has rarely since, seen societies that were so starkly unequal and tyrannical. Indeed, the great empires of Greece, India, Rome, Persia, and China that most of us remember from the early pages of high-school history books were places of moderation and freedom by comparison. The earliest surviving texts from archaic kingdoms convey the distinctive flavor of despotism. Here’s an excerpt from a cuneiform text found by archaeologists in Ashur, the capital city of the Assyrian Empire:

I am Tiglath Pileser the powerful king, the supreme King of Lashanan; King of the four regions, King of all Kings, Lord of Lords, the supreme Monarch of all Monarchs, the illustrious chief who under the auspices of the Sun god, being armed with the scepter and girt with the girdle of power over mankind, rules over all the people of Bel; the mighty Prince whose praise is blazoned forth among the Kings: the exalted sovereign, whose servants Ashur has appointed to the government of the country of the four regions … The conquering hero, the terror of whose name has overwhelmed all regions.6

Of course, not all societies pursued the same path.7 On the fairly isolated continent of Australia, in southern Africa, in the Arctic, and in parts of the Americas, the hunter-gatherer way of life persisted until Europeans arrived with guns and Bibles; and horticultural Big Man and chiefdom societies thrived on Pacific islands, in parts of North and South America, and in Africa. However, agriculturalists constantly sought to expand their territory. Hunter-gatherer and horticulturalist groups resisted; after all, who would want to live in a society so unequal as an archaic state? But they were no match militarily for full-time soldiers with the latest weapons. And so, when they came into contact with states, hunter-gatherers and horticulturalists were usually defeated and absorbed. States rose and fell; sometimes, after the collapse of a civilization, kingship would give way temporarily to chiefdom. However, over the millennia, there would come to be little or no alternative to dependency on food from field crops and on managed herds of oxen and cattle, also fed at least partly on field crops.

Eventually, specialization and innovation would lead to two remarkable inventions—writing and money—which played pivotal roles in the creation of the modern world, and which we will discuss below. Meanwhile, throughout the development of civilization, an influential minority of society, with ever more tools available for the unequal exercise of power, were able to live in cities and pursue occupations at least one step removed from nature. As a result, society as a whole began to see the natural world more and more as a pile of resources to be plundered, rather than as the source of life itself.

Sidebar 10: Pandemics and the Evolution of States and Empires

Herding Cattle, Flogging Slaves: Power from Domestication

A complex or stratified human society (that is, one with full-time division of labor and differing levels of wealth and political power) can be thought of as an ecosystem. Within it, humans, because of their differing classes, roles, and occupations, can act, in effect, as different species. To the extent that some exploit others, we could say that some act as “predators,” others as “prey.”9

Prior to the emergence of agrarian states, human “predation” occurred primarily between groups, rather than within groups. When food was scarce, some groups raided other groups’ stores of food, often wounding or killing fellow humans in the process, and sometimes taking captives. Raids could also be organized to defend or expand hunting territories. Revenge raids or killings often followed. Within groups, however, no one’s labor could be systematically exploited (for the moment I am not counting war captives as group members), so there was little internal “predation.” That changed as states arose: ruling classes began both to extract wealth from peasants via taxes and to treat them as inherently inferior beings. Rulers were, in this sense, “predators”; peasants and slaves were “prey.” The whole of history can be retold in these terms, illuminating the motives and methods of the powerful as they continually fleeced the relatively powerless. I’ll return to this line of thinking shortly.

However, at this point, a related metaphor—domestication—may be further illuminating. As humans settled down and began to domesticate animals, they likely applied some of the same techniques and attitudes they were using to tame sheep and goats to the project of enslaving other humans—another, more sinister form of “domestication.”10

We’ve already seen how a long process of human self-domestication may have led to the development of an array of new traits throughout the Pleistocene. But domestication also spread beyond humans in both deliberate and inadvertent ways, which are worth describing briefly. Roughly 40,000 to 15,000 years ago, somewhere on the Eurasian landmass, a nonhuman species—the wolf—became domesticated. The way this happened is a matter of speculation. Some scientists propose that humans captured wolf pups and kept them as pets. Others suggest this was another instance of self-domestication: perhaps the friendliest wolves gained a survival advantage from voluntary association with humans. Either way, the dog lineage gradually emerged, displaying all the traits of the domestication syndrome (reduced aggression, floppy ears, white patches of fur, and so on).

Later, when humans settled down to become farmers, the process of domesticating plant species became a deliberate preoccupation. People bred wheat with stems that wouldn’t shatter easily, so grain could be harvested on the stem rather than having to be picked up off the ground; they also selectively planted wheat that ripened all at once, again to make harvesting easier. Over centuries and millennia, people domesticated dozens of plants, including fig, flax, vetch, barley, pea, maize, and lentil. The process mostly just required selectively saving and replanting seeds from plants with desirable traits.

Domesticating animals was a somewhat different process, motivated by three distinct purposes. The first was to produce animals that would live with humans to provide companionship and various services—including the herding of other domesticates (dogs), rodent suppression (cats), and entertainment (chickens, bred at first primarily for cock fights).11 The second purpose was to modify prey animals (sheep, goats, cattle, water buffalo, yak, pig, reindeer, llama, alpaca, chicken, and turkey) to provide a docile and controllable food source. The third purpose was to tame large animals (horse, donkey, ox, camel) to provide motive power and transportation services.

The process of domestication of all these animals was a template that could be at least partly transferred to intrahuman relations. The order in time is fairly clear: animal domestication began before, or concurrent with, the development of social stratification and complexity—not after it.12

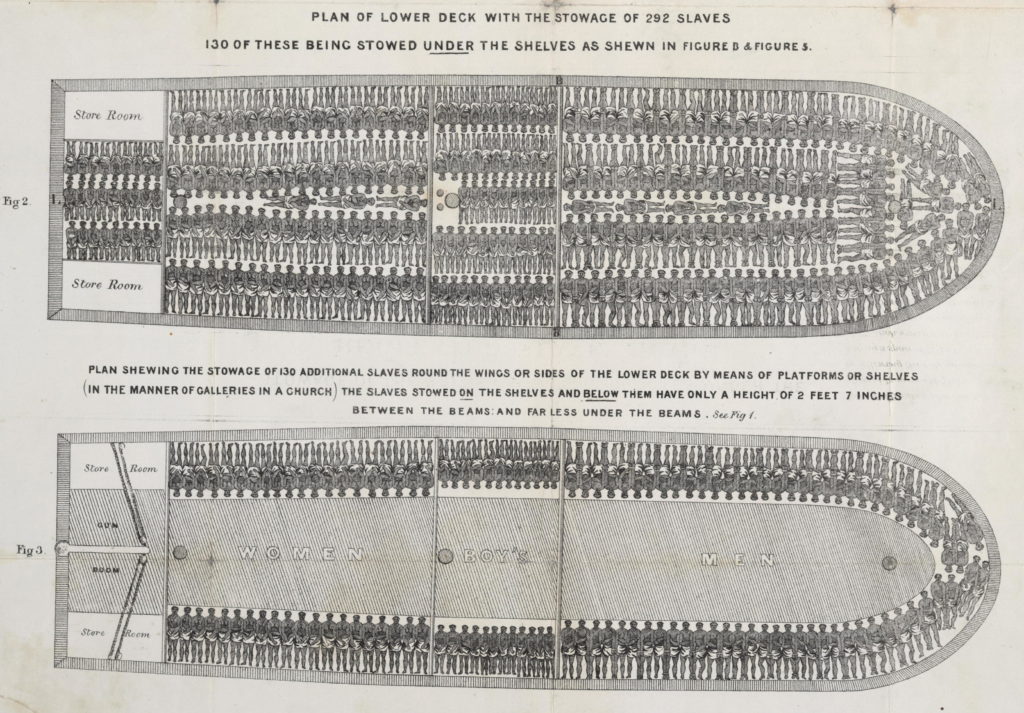

The steps involved in domesticating animals include capturing the animals, restraining or confining them, feeding them, accustoming them to human presence and to confinement, and controlling their reproduction in order to promote the traits wanted and discourage traits not wanted. Human slaves were similarly confined, fed, and forcibly accustomed to their new role and status. While the process of animal domestication centered on the deliberate genetic modification of the animal, human “domesticators” didn’t succeed in genetically altering their human “domesticates” (that’s the main reason human-on-human “domestication” is only metaphoric, not literal). Experiments have shown that it typically takes 50 generations or more of selective breeding to domesticate a wild animal such as a fox. Humans take longer than most other animals to reach reproductive age; thus, it would have been difficult to impossible for a restricted breeding group of humans to be kept enslaved long enough for genetic changes from selective breeding to appear—if selective breeding efforts were even undertaken.13 Nevertheless, the enslaving or colonizing of human groups with obvious differences in skin color or other physical, genetically-based characteristics would only feed attitudes of superiority among the slavers and colonizers—especially much later in history, during the European-organized African slave trade beginning in the 15th century.

Anthropologist Tim Ingold argues that hunter-gatherers regard animals as their equals, while animal-herding tribes and agriculturalists tend to treat animals as property to be mastered and controlled.14 According to many historians and archaeologists, cattle constituted the first form of property and wealth. Archaeologist Guillermo Algaze at the University of California, San Diego, finds that the first city-states in Mesopotamia were built on the principle of transferring methods of control from animals to fellow humans: scribes employed the same categories to describe captives and temple workers as they used to count state-owned cattle.15

Sidebar 11: Slavery and Power

Instances of slavery appeared sometimes among complex horticultural societies, including pre-Columbian cultures in North, Central, and South America. However, with the advent of states and kings, slavery became universal, notably in the civilizations of Mesopotamia, Egypt, Greece, Rome, Persia, India, and China. Slavery in many ways epitomizes power inequality in human relationships. We will have more to say about slavery in Chapter 6, where we will explore the abolition of slavery as an example and template of humanity’s ability to rein in excessive power.

Women’s and children’s status saw a marked deterioration during the shift from horticulture to agriculture. As we’ve seen, women in hunter-gatherer societies generally enjoyed full autonomy (though societies did vary somewhat in this regard); they did in most horticultural societies as well, and many of these societies were matrilineal or matrifocal. Hunter-gatherers treated their children indulgently, regarding them as autonomous individuals whose desires should not be thwarted, even allowing them to play with sharp knives, hot pots, and fires. As a result, children tended to develop self-reliance and precocious social skills (as well as scars from cuts and burns). But, in farming communities, plowing was largely men’s work, and men became owners of land and animals. People were the wealth of the state, and states were continually losing population to war, disease, and desertion, so women were encouraged to have as many children as was physically possible. Women’s realm of work became clearly separated from that of men, and was restricted to household toil and child rearing. The authors of the Bible, like most other literate men in archaic kingdoms, regarded women and children as chattel, defined as property other than real estate (the word’s origin is tied to the word cattle). Children were put to work as soon as they were able to perform useful tasks. Metaphorically, women and children were household human “domesticates.”

Domesticated non-human animals can be said to have benefitted from their relationship with humans in some ways: by losing their freedom, they gained protection, a stable supply of food, and the opportunity to spread their population across a wider geographic range (as for plants, Michael Pollan discusses the advantages they reaped from domestication in his popular book The Botany of Desire: A Plant’s Eye View of the World). “Domesticated” humans didn’t get such a good deal, and there is plenty of evidence that they fought back or escaped when possible, as we’ll see in a little more detail in Chapter 6.

As ideologies increasingly justified the power of upper classes over lower classes, and masters over slaves, psychological reinforcement of “domestication” spread, in many cases, even to those being “domesticated.” Masters restricted slaves’ knowledge of the world and tamped down their aspirations, encouraging them to accept their condition as proper and even divinely ordained.

Predation could be said to foster its own pattern of thought. Predators (non-human and human) do not appear to view their prey with much compassion; predation is a game to be played and enjoyed. If orcas and cougars could speak, they might echo the typical words of the professional bill collector: “It’s nothing personal; it’s just business.” The psychology of domestication follows suit: sheep, cattle, pigs, and poultry are often viewed simply as commodities, rather than as conscious beings with intention, imagination, and feeling. For a family living on a farm, daily proximity with livestock entails getting to know individual animals, which may earn sympathy and respect (especially from children). But when any animal comes to be seen as food, compassion tends to be attenuated. Attitudes fostered by human-on-human “predation” and “domestication” likewise generated psychological distance.

As noted earlier, domestication wasn’t always motivated by the desire for food. Some animal domesticates were pets, which provided affection, companionship, amusement, and beauty. In class-divided states, rulers developed similar relationships with “pet” artists, musicians, and other creative people, some of whom were war captives or slaves, as well as concubines and sex slaves, who similarly supplied companionship, beauty, and amusement. Today, many people lavish extraordinary care on their pets, as ancient Egyptians did with their cats. Likewise, society heaps attention and riches on popular musicians, actors, artists, athletes, models, and writers.

Predator-prey relationships are essential to healthy ecosystems. Are human “predator-prey” relationships similarly essential to complex human societies? Political idealists have replied with a resounding “no,” though the evidence is debated. Non-hierarchical, cooperative institutions, such as cooperative businesses, are numerous and successful in the world today. Further, some modern societies feature much lower levels of wealth inequality than do others. Clearly, humans are able to turn “predation” into cooperation, shifting vertical power at least partly back to horizontal power—a point to which we will return in chapters 6 and 7. Nonetheless, in the world currently, inequality and “predation” remain facts of life in every society.

Stories of Our Ancestors: Religion and Power

In discussing the origins of language in Chapter 2, I suggested that our species’ earliest use of words led to telling stories and asking existential questions, which in turn led to myths and belief in supernatural beings. Perhaps the most fateful new words in any nascent human vocabulary were why, how, and what. Why does night follow day? How did the rivers and mountains come to be? What happens to us when we die? Such questions were often answered with origin stories that were retold and re-enacted in rituals that entertained, united, and sustained communities from generation to generation.

While it was seeding minds with metaphysical questions, language was also driving a wedge between the left and right hemispheres of the human brain. Psychologist Julian Jaynes argued in his influential book The Origin of Consciousness in the Breakdown of the Bicameral Mind (1976) that, in the ancient world, the less-verbal right brain’s attempts to be heard were interpreted by the more-linguistic left brain as the voices of gods and spirits. Today, neurologists understand that injuries to, or seizures in, the brain’s left temporal lobe can trigger hallucinations of the “divine.”21

Among hunter-gatherer peoples, the specialist in matters of the sacred was the shaman (the word comes from Indigenous peoples of Siberia, but it denotes a nearly universal role in pre-agricultural societies). The shaman had a talent for entering altered states of consciousness, and giving voice to the spirits of the dead and of nature. Shamans treated individual and collective ills by intervening in the spirit world on behalf of the living, and by mending souls through cathartic ritual.

The subjective world of people in hunter-gatherer societies was magical—in a very specific sense of the word. Today, we usually think of magic as sleight-of-hand trickery, but in the minds of people in foraging and horticultural societies it was a technology of the imagination often involving the use of psychedelic plants and fungi, a set of practices to temporarily reconnect the brain hemispheres (though people didn’t think in such terms), and a way of exerting power in the realm of spirits. The spirit realm (Aboriginal Australians called it the Dreamtime) was timeless and malleable, the place of all beginnings and endings.

Calling the spiritual practices of hunters and gatherers “religions” confuses more than it clarifies. Shamanic spirituality had little in common with the universal religions with which we are familiar today—such as Christianity and Islam—which we will discuss shortly. The latter constitute a moralizing force in mass societies and feature Big Gods that watch over us 24/7, whereas the nature spirits of shamanic cultures had personalities and foibles like humans. But they also had power, and the purpose of contacting them in organized rituals was to access that power.

The spiritual beliefs and practices of horticultural societies remained magical, but focused more on the totem (an Ojibwe term that many anthropologists have generalized to refer to any spirit being, animal, sacred object, or symbol that serves as an emblem for a clan or tribe). Seasonal ceremonies became grand affairs that were attended by many clans or tribes, and presided over by ceremonial chiefs. Sacrifice, including human sacrifice and ritual torture, appeared in complex horticultural societies, but became far more common in early states.

With the advent of the state, kings, and agriculture, we see the emergence of gods and goddesses—personalities separate from the human sphere who are to be worshiped with prayer and sacrifice. Gods were typically thought of as hierarchically organized, with a high god at the apex of a pyramid of divine power. The king claimed either to be an embodiment of the high god, or to have a special and direct avenue of communication; this was the justification for his wielding absolute social power among fellow humans. Rituals and ceremonies became grand affairs, taking place in monumental plazas and temples, and were presided over by a numerous, socially elite, and hierarchically organized priestly class.22

The trend is unmistakable: as societies became larger, sacred presences became more abstracted from nature and from people’s immediate surroundings; people’s attitude toward them turned toward worship and sacrifice; and spiritual intermediaries became organized into hierarchies of full-time professionals.

We’ve already seen that societies became bigger in order to become more powerful—to resist raids or invasions and to be able to seize people and wealth from surrounding societies. But now the biggest societies had reached a critical stage in terms of scale. They were already unstable: kingdoms were always vulnerable to economic cycles, and thus also to revolutions, civil wars, and epidemics. Yet, after the state, as a type of social entity, had existed for at least a couple of thousand years, with individual kingdoms rising and collapsing frequently, still another shift in scale became necessary.

Roughly 3,000 years ago, after the first written documents had appeared, new military challengers began to menace states along an arc running from the Mediterranean Sea all the way to China. Animal-herding tribes inhabiting the great Eurasian Steppe, stretching from modern Ukraine to Manchuria, began using the horse (domesticated around 5,500 years ago) in warfare. Two horses could be hitched to a small cart, carrying a driver and one or two archers. This simple vehicle, the chariot, was the most important military innovation in the ancient world. Adding to this advantage, the tribes of the Steppe later invented saddles, and supplemented chariots with cavalry—horse-riding warriors. Foot soldiers were simply no match for the deadly mobility of chariots and cavalry, and early states fell one by one to invaders from the Steppe.

There were only two effective defensive strategies that early states could muster. The first was to improve their own military technology by acquiring horses from the Steppe dwellers, adopting chariots and cavalry, and developing better armor to deflect the arrows of mobile archers. The second was to increase the scale of society still further, so as to recruit bigger armies and pay for them with a bigger tax base. With enough tax revenues, it would even be possible to build long and high walls to keep the invaders out. Kingdoms became empires, encompassing large geographic areas and many disparate cultures and languages. One of the first, the Achaemenid Persian Empire, lasted from 550 to 330 BCE and had 25 to 30 million subjects. But sheer size made these early empires even less internally stable than the archaic kingdoms that preceded them. They were nations of strangers who, in many instances, couldn’t even understand one another’s speech. How to bind all these people together?23

Sidebar 12: DNA Evidence for Steppe Invasions

Cultural evolution found a solution, and it hinged on religion. Recall that cultural evolution requires cultural variation and competition. As the old palace-and-temple organization of social life was challenged by the political disruptions of empire-building, new religions were appearing everywhere throughout the ancient world, thus providing a significant source of cultural variation. For example, monotheism cropped up as a religious “mutation” in Egypt 3,300 years ago when Pharaoh Amenhotep IV changed his name to Akhenaten and instituted the worship of Aten, the Sun God, and banned the worship of the traditional pantheon of Egyptian deities. It was an unsuccessful innovation: after Akhenaten’s death, Egypt reverted to its old polytheism and efforts were made to erase all traces of Aten and Akhenaten from stone inscriptions and monuments. However, in succeeding centuries other similar religious variations appeared and some succeeded spectacularly well, attracting millions of followers and persisting for many centuries.

How did the new religions differ from what went before, and how did they help promote the competitive success and durability of empires? The most successful new religions featured Big Gods who were often universal—that is, they transcended cultural differences.24 Because they weren’t tied to a specific cultural context, universal religions could seek converts anywhere and everywhere (Christianity, Islam, and Buddhism would become missionary religions, in that they actively sought converts; Judaism didn’t take that route, and, while it still constitutes a Big God religion, it doesn’t have nearly as many adherents).

Even more importantly, the Big Gods were supernatural monitors who watched over everyone continually, spotting any immoral behavior. This internal divine surveillance proved to be exactly what was needed to guarantee trust among believers everywhere. And trust had many practical implications. For example, trade demanded trust between merchants. If all were believers in the same Big God, levels of trust increased significantly.

The new religions also often featured the notion of hell, or divine punishment, for those whose behavior was bad (antisocial), and heaven, or future rewards, for those whose actions were good (prosocial). Hell was effective at discouraging people from leaving the religion, while heaven was more effective in winning converts. Heaven was also a useful foil for the problem of economic inequality: it promised that, after a few brief years of poverty and suffering here on Earth, the good would enjoy an eternity of bliss. In the postmortem worlds, rich and poor would be re-sorted according to their inner virtues, with the righteous servant reaping a far better reward than the sinful master. As songwriter and union organizer Joe Hill would later put it:

Long-haired preachers come out every night

Try to tell you what’s wrong and what’s right

But when asked how ’bout something to eat

They will answer in voices so sweet

You will eat, bye and bye

In that glorious land above the sky

Work and pray, live on hay

You’ll get pie in the sky when you die.25

Finally, Big God religions demanded personally costly displays of commitment. Such displays served to prove that individuals were not merely giving lip service to the religion, but could actually be trusted to maintain prosocial behavior. Such displays could include pilgrimages, public prayer, donations, fasting, occasional abstinence from sex or certain foods, and attendance at frequent scheduled worship events—or, in more extreme cases, self-flagellation, life-long celibacy, or voluntary poverty.

In his book The Evolution of God, journalist Robert Wright traces the story of how one quirky tribal war deity was gradually elevated to become a universal, moralizing, and all-surveilling Big God, the object of worship not just of all Jews, but Christians and Muslims as well. Moralizing Big Gods also appeared in China and India, but in the context of polytheistic religions. In all cases, these new religions, or new versions of old religions, possessed characteristics needed to ensure large-scale cooperation (supernatural monitoring, divine punishments and rewards, and costly displays of commitment).26

Several cultural evolution theorists, including Peter Turchin and psychologist Ara Norenzayan, have converged on the Big Gods explanation for the emergence of ultrasocial cooperation in what philosopher Karl Jaspers called the “Axial Age”—i.e., the turning point of human history, when empires and universal religions first appeared (roughly the eighth to the third century BCE).27 Their argument is based not just on historical research, but on hundreds of experiments in psychology, behavioral economics, and related fields. In his book Big Gods: How Religion Transformed Cooperation and Conflict, Norenzayan describes ingenious psychological experiments that have confirmed that the expectation of divine surveillance does indeed lead people to cheat less, to be more generous toward others, and to punish others for antisocial behavior even if doing so is personally costly. Experiments have also shown that the idea of hell is better than that of heaven at motivating prosocial behavior.

It’s important to remember that moral behavior was not a spiritual preoccupation previously. Among hunter-gatherers, spiritual practice had little or nothing to do with promoting prosocial actions. In those societies, everyone was constantly encouraged to share, while obnoxious individuals could simply be ostracized or killed. In archaic states, kings controlled their people’s behavior through state-sanctioned coercive power justified by proclamations issuing from the chief god by way of the king and the priesthood. In contrast, the Big God religions put a watcher inside each individual’s head. This was an economical and effective new way to control the behavior of millions of people who might have little else in common.

Of course, that’s not all the new religions did. Contemplation of the divine, and of the mythic biographies of the religions’ founders, gave individuals a sense of purpose and meaning. Religious art, architecture, and music expanded humanity’s access to, and appreciation of, beauty in many forms. Further, the Axial Age resulted in at least a partial reduction of inequality throughout most subsequent civilizations. Emperors, kings, and members of the aristocracy had to convert to the new religions in order to maintain their credibility; but once they did so, the ways they exerted power shifted. Emperor Ashoka of the Maurya Dynasty, in what is now India, ruled from about 268 to 232 BCE. Early in his reign, Ashoka pursued successful conquests, resulting in perhaps 100,000 deaths. But then he converted to Buddhism and spent the rest of his reign spreading Buddhist doctrine. Here is a passage from one of his edicts:

Beloved-of-the-Gods speaks thus. This Dhamma [i.e., universal truth] edict was written twenty-six years after my coronation. My magistrates are working among the people, among many hundreds of thousands of people. The hearing of petitions and the administration of justice has been left to them so that they can do their duties confidently and fearlessly and so that they can work for the welfare, happiness and benefit of the people in the country. But they should remember what causes happiness and sorrow, and being themselves devoted to the Dhamma, they should encourage the people in the country to do the same, that they may attain happiness in this world and the next.28

Compare Ashoka’s words with those of Assyrian king Tiglath Pileser, quoted earlier. The archaic king Tiglath, unlike Ashoka, evidently wasn’t much concerned with the happiness of his people, but much more so with their obsequious contributions to his own glory and vertical power. Nevertheless, it would be the empires that were devoted to happiness and cooperation that would win in the end. The maximum power principle was still at work in social evolution, but in a new and unexpected way: by moderating their power in the short run, rulers had found a way to stabilize and extend social power in the longer run. This is a pattern we’ll examine in more detail in Chapter 6.

Universal religions also had their dark side. The body count for Christianity alone runs into the tens of millions. Believers committed untold atrocities against non-believers as yet another way of demonstrating their commitment to the faith. The victims—primarily Indigenous peoples of the Americas, Africa, Australia, and the Pacific Islands—often faced the choice either to convert to a religion they could not understand, or risk torture, death, or enslavement. Whatever advantages they provided for believers, Big God religions were also instruments of vertical social power and domination vis-à-vis nonbelievers.

Sidebar 13: Justifying Colonialism

The universal religions were so successful at motivating cooperative behavior, gaining new adherents, and encouraging believers to have more children (as we’ll discuss in Chapter 5) that they have persisted and grown through the centuries right up to the present. Many empires based on Christianity or Islam (the Byzantine, Holy Roman, Ottoman, British, Spanish, French, Portuguese, and Russian Empires, to name just a few) rose and fell during the past two millennia, largely shaping world history. Today, 2.3 billion people call themselves Christians, 1.8 billion identify as Muslims, and half a billion practice Buddhism; altogether, these three missionary religions influence the behavior of nearly two-thirds of humanity.

Are Big Gods necessary to a moral society? Not necessarily. Atheists can be ultrasocial too, as we see in atheist-majority Scandinavian countries today. That observation opens the door to a discussion of the future of social power, which we’ll take up in chapters 6 and 7.

Tools for Wording: Communication Technologies31

In Chapter 2, I described tools as prosthetic extensions of the body, and language as the first soft technology. Bringing tools and language together, via communication technologies, enabled the emergence of a series of new pathways to social power. As we’ll see, the powers of communication technologies transformed societies, even playing a key role in the evolution of Big God religions.

Writing is the primary technology of language. The first true writing is believed to have appeared in Sumeria around 5,300 years ago, in the form of baked clay tablets inscribed with thousands of tiny triangular stick impressions (i.e., cuneiform writing). Earlier proto-writing took the form of jotted reminders that made sense primarily to the writer or to a small community; these scribbles weren’t capable of recording complete thoughts and in most cases cannot be deciphered by modern linguists. The cuneiform writing of Mesopotamia, in contrast, turned into a full symbolic representation of a human language, and through the texts that Sumerian scribes left behind we can glimpse the thoughts of people who lived thousands of years before us. Writing was invented independently about 4,000 years ago in China, and again in Mesoamerica by about 2,300 years ago; these writing systems achieved the same purpose as Sumerian cuneiform, but have almost nothing else in common with it.

The first written texts were inventories and government records produced in the archaic state of Sumeria by full-time scribes working for the king and the priesthood. Since only a tiny fraction of the populace was literate, there was no expectation that these records would be read by anyone outside the palace or the temple. But as the habit of writing took root, and as literacy spread, so did the usefulness and power of recording thoughts on clay or other media. This process took some time: several centuries separate the appearance of the first writing system and its use as anything other than a way of keeping track of grain yields, tax payments, numbers of cattle, and numbers of war captives.

The Epic of Gilgamesh, a Sumerian epic poem dating to almost 5,000 years ago, is widely regarded as the first piece of literature. It has a few themes and characters in common with the later Hebrew Bible, including Eden and Deluge narratives, with protagonists who resemble Adam, Eve, and Noah. Thus, the very first written story still resonates throughout much of humanity to this day.

Religious myths were probably transmitted orally for untold generations before being put into writing. But once recorded on clay, parchment, or papyrus, these stories took on a permanent and objective existence. Early religious texts were regarded as sacred objects, and achieved a durability and portability that assisted in the spread of faith and belief. This would be a key advantage in the case of the Big God missionary religions, as they sought converts over ever-wider geographic areas.

Writing conferred many practical advantages; “the power of the written word” is a phrase familiar to nearly everyone, and for good reason. Among other uses, writing enabled laws to be publicly codified. The Code of Hammurabi, written around 1754 BCE, was one of the world’s earliest written law codes, specifying wages for various occupations and punishments for various crimes, all graded on a social scale, depending on whether one was a slave or free person, male or female. In pre-state societies all such matters were decided by custom and consensus; with written laws, justice became less a matter for ad hoc community deliberation, and more one of adherence to objective standards. Writing also assisted enormously with commercial record keeping, thus facilitating trade. It even altered military strategy, as spies could send coded notes about enemy troop movements.

Like all technologies, writing changed the people who used it. Oral culture was rooted in memory, multisensory immersion, and community; in contrast, written culture is visual, abstract, and objectifying. Poet Gary Snyder tells of his journey, many years ago, in Australian Aboriginal country with a Pintupi elder named Jimmy Tjungurrayi. They were traveling at about 25 miles per hour in the back of a pickup truck, the elder reciting stories at breakneck speed, beginning a new one before finishing the last. Later Snyder realized that these stories were tied to specific places they had been passing. The stories were meant to be told while walking at a much slower speed, so the elder had to struggle to keep up. Aboriginal orally transmitted place-stories were a multidimensional map that included ancestral relations and events; intimate knowledge of specific land formations, plants, and animals; and myths of the Dreamtime. Writing can give us a more rational representation of a territory, one we can refer to at our own pace. But it cannot capture the immediate, full-sensory engagement with nature and community that oral culture maintained. And so, as we became literate, entire realms of shared human experience tended to atrophy.32

Early writing consisted of what were essentially little pictures, and it took years to learn to read and write with them (as it still does with Chinese ideograms). But an innovation in communication technology—the phonetic alphabet—made it possible to learn to read and write at a basic level in roughly 30 hours. With this ease of use came a further level of abstraction in human thinking.

Alphabetic writing originated about 4,000 years ago among Semitic peoples living in or near Egypt. It was used only sporadically for about 500 years until being officially adopted by Phoenician city-states that carried on large-scale maritime trade. It’s probably no historical accident that the alphabet first caught on with people who traded and moved about a great deal (other early alphabet adopters included the Hebrews, who were wandering desert herders; and the Greeks, who were traders like the Phoenicians). Alphabetic writing was more efficient than picture writing, and, because it was phonetic, could more readily record multiple languages, thereby making translation and trading easier. Significantly, for adherents of missionary Big God religions, the alphabet could aid in spreading the good word.

With its short roster of intrinsically meaningless letters, the alphabet could viralize literacy while speeding up trade and other interactions between cultures. It also led to a further disconnection of thought from the immediate senses (media theorist Marshall McLuhan called alphabet users “abced-minded”). Picture writing was already abstract, in that the animals, plants, and humans used as symbols were stylized and divorced from movement, sound, smell, and taste; but the alphabet was doubly abstract: even though its letters originated as pictures of oxen, fish, hands, and feet, this vestigial picture content was irrelevant to the meaning conveyed through words being written and read. To know that the letter “B” originated as a picture of a house tells us nothing about the meaning of words containing that letter.

Further innovations in communication technology happened much later, but brought equally profound social, economic, cultural, and psychological consequences.33 The printing of alphabetic languages with moveable type, originating with Johannes Gutenberg’s Bible around 1455, sowed the idea of interchangeable parts, and therefore of mass production.34 Printing was also a democratizing force in society: soon, nearly any literate person could afford a cheaply printed book, and so at least in principle anyone could gain access to the world’s preserved stores of higher knowledge. Literacy rates rose as increasing numbers of people took advantage of this opportunity. With printing, the expanding middle class could educate itself on a wide variety of subjects—from law to science to the daily churn of gossip and current events described in newspapers (the first of which appeared in Germany in 1605). A century after Gutenberg, printed Bibles underscored Martin Luther’s argument that the common people should have direct access to scripture translated into their own language. The printing press thus played a key role in the origination and spreading of Protestantism.

The telegraph, invented in the 19th century, enabled nearly instantaneous point-to-point transmission of words and numbers. News could travel in a flash, but it had to be reduced to a series of dots and dashes—an early form of digital (though not strictly binary) communication. This could lead to mistakes: when a telegrapher in Britain announced the discovery of a new element named “aluminium” to an American counterpart, the second “i” in the word wasn’t received and recorded; as a result, the element is still known in the United States as “aluminum.”

The patenting of the telephone by Alexander Graham Bell in 1876 led to instant two-way communication by voice. While printing was a static and visual medium, telephonic communication engaged the ear and required the full, immediate attention of users. This made the phone a source of rude interruption to the print-oriented, but an enthralling lifeline to teenagers eager to chat with boyfriends and girlfriends.

The writing of music (i.e., musical notation, which originated in ancient Babylonia, was revived in the Middle Ages, and was perfected in the 17th century) exposed music to analysis and enabled the authorship and ownership of compositions. Analysis and authorship then created the incentive for the development of greater complexity and originality in music, leading to Bach, Beethoven, and beyond. Sound recording (starting in the 1890s) made music almost effortlessly accessible at any time and exposed listeners to exotic musical styles. Now performances, as well as compositions, could be owned by musicians, record companies, and listeners. Recording led quickly to the jazz age, then to rock & roll, folk, country, rhythm & blues, disco, punk, grunge, hip hop, world music, electronic music, hypnagogic pop, and on and on, each generating its own cultural world of in-group fashion and lingo.

Meanwhile movies (which became popular in the 1910s) borrowed their content from printed novels and theatrical plays, and their style from still photography (invented in the 1820s). Films were, metaphorically, the projections of dreams. As such, they riveted the imaginations of moviegoers, creating a giant industry owned by powerful trend-setting moguls and populated by “stars” whom members of the public longed to know as intimately as their own family members.

Radio, like printing, could deliver a message to many people scattered widely, each in the privacy of their home; and like the telephone, it was auditory and instantaneous. But unlike the telephone, radio (which came into widespread use in the 1920s) would become primarily a one-way means of broadcasting voice and music. Because it was auditory, radio involved listeners in a more psychologically intensive way than print (McLuhan called radio the “tribal drum”). In the mid-20th century, millions gathered around their radio cabinets to listen raptly to political leaders like Franklin Roosevelt and Adolf Hitler—as well as preachers like Pastor Charles Fuller, comedians like Bob Hope and Gracie Allen, and musicians ranging from Ella Fitzgerald to Jascha Heifetz. Most radio programs featured commercials to generate revenue to pay for content. The power of radio thus produced both mass propaganda (it’s questionable whether fascism could have taken hold in Europe without radio) and mass advertising—signal developments in the political and economic history of power.

Television (widely adopted in the 1950s) brought a low-res version of the movie theater into nearly every home. Because the TV image was made up of thousands of blinking dots requiring unconscious neurological processes to make sense of them, it entrained the brain in a way that movies didn’t. Further, because it engaged viewers with both sound and image, and in their own homes, television tended to turn viewers into hyperpassive receivers of broadcast content. In America, for the first three decades of the television era, there were only four options for what to watch at any given time—programming on three commercial networks and one commercial-free educational network (other nations had even fewer choices). As a result of all these factors, television enormously enhanced the power of corporate advertisers—and, in authoritarian states, that of the government. Indeed, television may have been the most powerful technological means of shaping mass thought and behavior ever developed, until quite recently.

During the past three decades television has changed. High-definition LCD screens, the proliferation of cable networks, and the emergence of on-demand streaming services have altered TV’s fundamental nature. Today it is even more of a hybrid medium than film or early television. Not only does it include sight and sound at high levels of fidelity; not only does it present content derived from movies, print novels, theater, newspapers, radio sports and weather reports, and even comic books; but television now also incorporates elements of the computer, the internet, and social media. TV is a more democratized medium than it was formerly, in that it offers consumers more options. But it is also more socially and politically polarizing, as is the case with related new developments discussed below.

The internet-connected personal computer, which came into general use in the 1990s, was, like printing, a source of horizontal power for many society members. Anyone could become a publisher by creating a web page and writing a blog, or by posting videos. Anyone could open an online store. Meanwhile, the user could access enormous amounts of information about every imaginable topic via a search engine or Wikipedia. It’s too bad McLuhan wasn’t around to see it; he might have called the internet-connected computer a prosthetic extension of the brain itself.

Computers and digitization have led to shifts in human cognition and social organization that are still in progress; the same with smartphones and social media, which fashion an information world tailored to each individual’s set of expectations, interests, and prejudices. These devices and their software offer a portable, even wearable, and infinitely customizable portal into an immense digital world of news, entertainment, and personal communication with friends, relatives, and business contacts scattered across the world.

However, the newest communication technologies are means not just of mass expression but also of mass persuasion, in that they give their corporate managers opportunities for digital surveillance and marketing, and offer political actors (not only governments, but fringe groups of every stripe) means for the spread of precisely targeted propaganda often cleverly disguised as news. Digital face recognition, among other automated intelligence technologies, combined with the internet-connected computer and smartphone, supplies governments with the means for nearly total information control. The real-life equivalent of Big Brother (the totalitarian leader in George Orwell’s prophetic dystopian novel 1984, published in 1949) now has the ability to know who you are, where you are, and what you are thinking and doing at almost any moment. He can manipulate your beliefs, desires, and fears.

In this briefly recounted history of communication technologies, one can’t help but note a steep acceleration of cultural change, and a correspondingly swift intensification and extension of social power. Each new communication technology has magnified the power of language (and images), and some of these technologies have given us vastly more access to information and knowledge. But each has also imposed costs and risks. At the end of the story we find ourselves enmeshed with communication technologies to such a degree that many people now use the word technology to refer solely to the very latest computerized communication devices and their software, ignoring millions of other tools and the tens of thousands of years during which we developed and used them. We are increasingly captured in the ever-shrinking now of unblinking digital awareness.

Numbers on Money

Economists typically describe money as a neutral medium of exchange. But, in reality, money is better thought of as quantifiable, storable, transferable, and portable social power. I have defined social power as the ability to get other people to do something. With enough money, you can indeed get other people to do all sorts of things.

To understand money, it’s essential to know the context in which it emerged. Money facilitates trade, and in early societies trade was an adversarial activity undertaken primarily between strangers. Within small pre-state societies—that is, among people who knew one another—a gift economy prevailed. People simply shared what they had, in a web of mutual indebtedness. Trade with outsiders, by contrast, was a tense exchange of goods in which men from different tribes sought to get the better of one another; if there was suspicion of cheating, someone could get hurt or killed. Within the group, cooperation was essential to everyone’s survival, so any intra-group activity that smacked of trade was unthinkable.

However, with the advent of the multi-ethnic state and full-time division of labor, trade necessarily began to occur within the broadened borders of society. In order to keep the process of exchange from eroding community solidarity and occasionally erupting into violence, the state created and regulated markets. The palace and the temple made laws specifying where and when markets could operate, and set the terms on which sellers should extend credit to buyers. The state also created and enforced standard units of measure.

Credit (i.e., debt) and money arose simultaneously within this milieu, and were, and are, two sides of the same metaphorical coin. As anthropologist David Graeber recounts in his illuminating book Debt: The First 5,000 Years, you can’t have one without the other.35 Debt and money have co-evolved through the millennia, with each unit of any currency implying an obligation on someone’s part to pay or repay a similar or greater amount, in the forms of money, goods, or labor.

Money also co-evolved with number systems, arithmetic, and geometry. Keeping track of money and credit (and the stuff they bought—often cattle or land) required numbers and the ability to manipulate them. Counting, the earliest evidence of which is preserved in tally marks on animal bones, began long before verbal writing, perhaps up to 40,000 years ago. By 6,000 years ago, people in the Zagros region of Iran were keeping track of sheep using clay tokens. Cities within the archaic state of Sumer each had their own local numeral systems, used mostly in accounting for land, animals, and slaves. Geometry evolved for the purpose of surveying land. As trade expanded, so did numeracy, and so did the sophistication of mathematics. Numbers and their manipulation would, of course, eventually give humanity impressive powers in other contexts—primarily in science and engineering, where they would enable the discovery of exoplanets, the sequencing of the human genome, and a plethora of other marvels.

The earliest forms of money consisted of anything from sheep to shells, but eventually silver and gold emerged as the most practical, universally accepted mediums of exchange—while also serving as measures and stores of value. However, in early states, ingots or coins themselves were not typically traded. Instead, traders kept credit accounts using ledgers (at first on clay tablets; traders in later societies would use paper books or, most recently, computer hard drives). Precious metals were stored—often more-or-less permanently—in the palace treasury by the state as an ultimate guarantee of the state’s ability to regulate trade, especially with other states. Later on, gold and silver coins came into more common use by merchants and nobles, but most peasants still had little contact with them, conducting whatever business they engaged in using grain, domesticated animals, and credit, with money serving mainly as a measure of their indebtedness.